Shadow and the future of Cloud Computing

Introduction: The end of Moore’s Law

Moore’s Law will soon hit a wall. Or perhaps not.

But it does not matter: for more than a decade, we have been in a zone of diminishing returns. Twenty years ago, things were simple. Thanks to steady gains in frequency and efficiency, computer processing power doubled every two years. In 1983 my personal computer was an Apple II with a mere 1MHz 8-bit CPU; in 1994 it was a 90MHz 32-bit Pentium, and in 2004 a 2GHz 64-bit Athlon.

But the frequency progression came to a halt. Twelve years later, our desktop CPUs cannot easily sustain running at 4GHz. Efficiency gains also came to a halt. Even a very wide CPU, such as the Intel Core, can only process 1.5 instructions per cycle on average.

The industry transitioned to multi-core designs. Now even phone CPUs have at least two cores. But desktop CPUs seem to have hit a 4-core ceiling. The reason? Except in very specific cases, it is very hard to develop an efficient multi-threaded application.

So what now? Some predict the advent of “Elastic compute cloud cores”, which is a neat name for “Hardware as a Service” (HaaS). And that’s what I will discuss in this article, through the prism of Blade, a young French startup that claims to have achieved some breakthroughs in the field of cloud gaming!

Cloud Gaming?

Cloud Gaming is a very peculiar subset of HaaS. Instead of running your video game on your home console or tablet, it is run on a distant server, in the “cloud” and the resulting frames are streamed to you. Your device is, thus, only used for inputs and display.

Two of the most famous services are Geforce Now from NVIDIA and Playstation Now from Sony.

But cloud gaming is also one of the most difficult areas of cloud computing. Games require a tremendous amount of processing power and, more specifically, they need to run on a powerful GPU. And last but not least comes the latency. In order to be playable, a game requires a very low latency from the moment you input your orders to the moment the result is displayed on the screen. As the Internet was not designed with low latencies in mind, this aspect is not easily tamed.

The two services mentioned above almost manage to solve these problems. Almost…

Playstation Now runs only older games, which require less computational power. And Geforce Now is capped at 1080p. Plus, according to reviews, it achieves latencies in the region of 150ms. Quite good: the games are playable. But even this latency can make the game feel a bit jerky if you are as attentive as some gamers claim to be.

Enter Blade

Blade is a young French startup claiming that, thanks to its brand new patented technologies, cloud gaming is now a solved problem! (Note, though, that those patents are still pending and not yet public.)

If true, their most impressive achievement is the latency induced by their solution: less than 16ms according to their website!

Concerning this latency thing

Let me define what “latency” refers to. It is the time elapsed between your input (e.g. a button pressed on the controller) and its effect on the image displayed on the screen.

If your gaming system is a home console, such as an Xbox or a Playstation, the latency is the sum of the time for your input to be interpreted by the software (negligible), the time spent rendering the resulting 3D image, and the time spent sending it to the TV (negligible).

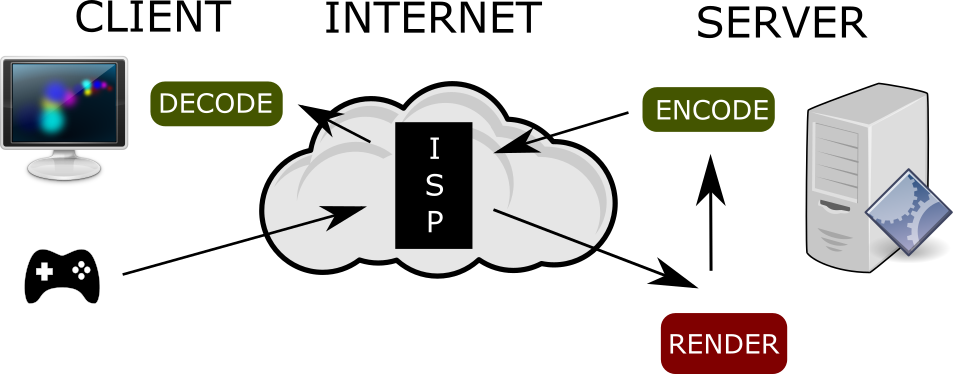

But if you’re playing on a distant server, things are not so simple.

First, the data corresponding to your input travels to the server. It goes through your ISP, then many routers and servers, before arriving at its destination. The Internet was not designed as a low-latency network…

Then, just as on a home console, the software computes the corresponding image.

But before being sent back, it has to be compressed or the bandwidth requirement to send the stream of images would be unreasonable…
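To get a feel for why compression is unavoidable, here is a minimal sketch of the raw bitrate of an uncompressed stream. The 1080p resolution and 60fps rate match the figures discussed in this article; the 24 bits per pixel and the function name are assumptions made purely for illustration.

```python
def raw_video_bitrate_mbps(width, height, fps, bits_per_pixel=24):
    """Bitrate of an uncompressed video stream, in megabits per second."""
    return width * height * bits_per_pixel * fps / 1_000_000


# Assumed stream: 1920x1080 pixels, 60 frames per second, 24-bit color.
raw_1080p60 = raw_video_bitrate_mbps(1920, 1080, 60)
print(f"Uncompressed 1080p60: {raw_1080p60:.0f} Mbit/s")
```

Under these assumptions the raw stream comes close to 3 Gbit/s, far beyond what a typical home connection can carry.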

Then back home. With more or less the same number of routers and servers lying along the way.

Then… the image has to be decompressed! Before finally being displayed on the screen.

Sources of latencies in cloud gaming
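The chain described above can be sketched as a simple latency budget: the end-to-end latency is the sum of each stage. The per-stage values below are hypothetical placeholders, not measurements from Blade or anyone else; only the 16ms target comes from Blade's claim.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    ms: float  # time spent in this stage, in milliseconds


def total_latency_ms(stages):
    """End-to-end latency is the sum of all stages in the chain."""
    return sum(stage.ms for stage in stages)


# Hypothetical values for illustration only, NOT measurements.
cloud_pipeline = [
    Stage("uplink (input to server)", 3.0),
    Stage("render", 7.0),
    Stage("encode", 2.0),
    Stage("downlink (frame to client)", 3.0),
    Stage("decode", 1.0),
]

BUDGET_MS = 16.0  # the target claimed by Blade
total = total_latency_ms(cloud_pipeline)

for stage in cloud_pipeline:
    print(f"{stage.name:28s} {stage.ms:5.1f} ms")
print(f"{'total':28s} {total:5.1f} ms (budget: {BUDGET_MS} ms)")
```

Whatever the real numbers are, the point is that every stage eats into the same small budget, so each one has to be attacked separately.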


Blade’s answers to latency

A few days ago, I was invited to Blade, where I could ask many questions in order to try to understand how they tackle these problems. I will explain my understanding of their technology and what I extrapolate from it. Of course, Blade did not share all their secrets, so I have to try to fill in the blanks…

The network

Blade will require its first customers to have fiber. Cable subscribers will come second. But there will be no support for xDSL users. And over 4G? I don’t know, but such mobile access does not appear to be their priority right now. Fiber offers very low latencies compared to DSL, so it helps a lot.

But they are also restricting their very first user base to France. That allowed them to deal with the four major internet providers in the country and to directly link their network to those ISPs’. This limits the number of hops required for data to travel from most French subscribers to Blade’s servers.

They also claim to have some patents pending concerning the network side of things, but I could not gather much info there. As it is far from my field of expertise, I will not try to guess what those patents could cover.

The video compression

I was eagerly awaiting answers about video compression, as plain H264 encoding is not suited to low-latency streaming. Indeed, the main purpose of H264, when it was designed, was to compress movies. In that case it does not matter much if, in order to achieve the best quality/bitrate ratio, the encoder has to buffer many frames, producing hundreds of milliseconds of latency.
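To see how buffering translates into latency, here is a minimal sketch: each frame the encoder holds back before emitting output delays the stream by one frame period. The frame counts below are illustrative assumptions, not the settings of any particular encoder.

```python
def buffering_latency_ms(buffered_frames, fps):
    """Delay added when an encoder holds back `buffered_frames` frames."""
    return buffered_frames * 1000.0 / fps


# Assumed buffer depths at 60 fps, for illustration only.
for frames in (0, 3, 10, 30):
    delay = buffering_latency_ms(frames, fps=60)
    print(f"{frames:2d} buffered frames -> {delay:6.1f} ms")
```

With these assumed values, a few dozen buffered frames already cost hundreds of milliseconds, which is harmless for a movie but unusable for a game.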

In that field too, Blade claims as yet undisclosed pending patents. They told me they were using a heavily tuned H264 encoder. So heavily tuned that most hardware decoders are not flexible enough to handle the video.

I can also imagine that they use an open-source low-latency audio codec. For instance, Opus supports frame sizes as small as 2.5ms.

In order to decode their stream in the best conditions, they will also sell a small “box”: the Shadow. It is comparable in size to a Raspberry Pi and was designed by Blade using off-the-shelf components: no custom ASIC or FPGA here. The Shadow is powered by Linux and connects to the stream as soon as it has booted. This way, users never see the actual OS and are given the illusion of using a Windows box: the distant computer.

A software client is also available on Linux, Android and Windows. But the 16ms latency will only be sustained on the Shadow. As a matter of fact, buying the Shadow will be mandatory for their subscribers!

The server

The server’s hardware is also crucial. If a game runs at 60fps on it, each frame takes about 16ms to compute. Rendering alone would then use up Blade’s entire 16ms latency target. Thus, the hardware has to be fast enough to run your game way faster than 60fps! It has to run it at maybe 120 or even 140fps!
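The arithmetic behind this is simply that the time to render one frame is the inverse of the frame rate. The sketch below computes it for the frame rates mentioned above and shows what would remain of a 16ms budget for the network and the video codec; treating the budget as a plain subtraction is a simplifying assumption.

```python
TARGET_MS = 16.0  # Blade's claimed end-to-end latency


def frame_time_ms(fps):
    """Time needed to render one frame at a given frame rate."""
    return 1000.0 / fps


for fps in (60, 120, 140):
    render = frame_time_ms(fps)
    remaining = TARGET_MS - render  # left for network, encode and decode
    print(f"{fps:3d} fps: {render:5.2f} ms per frame, {remaining:5.2f} ms remaining")
```

At 60fps the remainder is negative, while at 120 or 140fps roughly half of the budget is still available for everything else.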

As the most crucial element of a gaming computer is the GPU, Blade was not shy: its servers are equipped with the latest Geforce 1080! What’s more, each user gets the full benefit of a GPU: they are not shared between users as on competing solutions!

The magic behind the curtain makes it possible to instantiate a virtual gaming machine on the fly, consisting of a virtualized CPU and its main memory, networked storage hosting the user’s private data, and a physical GPU for the user’s sole usage.

Actually, as a customer, you rent such a virtual machine and the server will instantiate it when you need it. Your data are also kept on Blade’s servers and are, of course, persistent.

The demos

I admit that I have limited knowledge in the field of networking and virtualization. So, although Blade’s developers gave me convincing answers about their claim to keep latency below 16ms, I was waiting for the demo to form my opinion.

They currently have two demos in place. The first one involves two cheap netbooks. One is running Blade’s solution, the other one is the plain netbook. The second demo consists of playing Overwatch on their Shadow hardware.

On the netbooks

The goal of this demo is to use the two netbooks side by side.

The user should not notice any difference on light tasks, such as handling local files using Explorer or checking email.

On this occasion I managed to guess which computer was displaying the stream from the cloud. By scrolling like crazy, I could detect a few missed frames. Admittedly, this is not a representative use case: I actively tried to provoke those lags.

Next, they launched Photoshop. A Gaussian blur filter was to be applied to a photo of hundreds of megapixels. Of course, the real netbook struggled, while the (quite demanding) task was way faster on the distant computer.

On the plus side, the lag was low enough that I could not notice it when moving the mouse pointer and going through the menus. On the minus side, I could again notice some lag and some compression artifacts when I clicked undo/redo. This is a more realistic use case than what I did before to provoke lags. Indeed, on a powerful computer with plenty of RAM, undoing (or going back in history) should be near instantaneous. When working on my photos, it is part of my workflow to go back and forth to evaluate the effect of the various filters I happen to apply.

Finally, I ran Futuremark’s latest benchmark on both machines. Of course, the score of the “true netbook” was pitiful while that of the cloud computer was stellar!

But, once again, I could spot the difference. In the corners, there were some compression artifacts (lightly blurred blocks) appearing on the streamed video.

Bear in mind that, although the cloud computer was not 100% indistinguishable from a true local computer, the demo was convincing enough. And offloaded heavy applications such as Photoshop are quite usable! As I know a thing or two about video compression, I knew exactly how to stress their video encoder and where to look for the result. It is doubtful that an average user would spot the difference.

On the Shadow

Remember that Blade developed custom hardware. As they are working full time on it, they also told me that the software solution running on the netbook was not up to date. Thus I was expecting an even better experience on the Shadow!

This time, the demo consisted of playing a game of Overwatch. First-person shooters (FPS) are the most demanding games, so this is quite pertinent. As before, I tried to provoke artifacts by jumping, turning around and teleporting like crazy. But this time I could not spot any!

As a matter of fact, I could not feel any difference with playing on my own computer! The graphics settings were 1080p / Ultra.

This time I was 100% convinced! 🙂

So much so that I unplugged the Ethernet cable to verify that the game was indeed streamed 😀

The future of cloud gaming?

Although I cannot certify that the latencies I experienced were indeed lower than 16ms, Blade’s devs gave me credible answers to my questions and I was convinced by the actual experience. On their hardware device at least.

I’m quite sure that they’re technologically onto something.

I’m less sure about what they unveiled concerning their business model.

They plan to target hardcore gamers and sell them a monthly subscription to their service. The price is still to be disclosed.

Blade will also require its customers to buy its hardware, which includes a Windows 10 license that will run on the cloud computer instance. Thus, expect something around 200€.

And don’t forget that all you’ll get is an access to a cloud-computer: gamers will still have to buy their games. It is not a “Netflix-like” kind of subscription, offering a large library of games to be streamed.

I think that’s a large amount of money. Even if the gaming experience is premium compared to the way cheaper competing solutions.

But investors seem to believe in them, so I may well be wrong. Just wait and see…

The future of cloud computing?

If I have my doubts about the viability of their announced business plan focusing on cloud gaming, their technical solution opens a window on something else. Remember the demo when I tried Photoshop? It was quite usable indeed!

Nowadays, customers favor light and practical computing devices. Unfortunately, those are too weak to manage heavy computing tasks. And they will stay too weak for years to come because, as you may remember, Moore’s Law is dead!

Enter cloud computing. If, in times of need, those customers could easily instantiate a cloud computer such as the one I ran Photoshop on, the problem would be solved!

Of course, the pricing could not be the one Blade presented to me. Maybe a price depending on usage? And there is the problem of the Windows license, which is tied to the instantiated personal cloud computer…

But the prospects are quite fascinating!