Intel 80386, a revolutionary CPU

What is the most important CPU that Intel ever produced?

- The Pentium? The chip was no slouch, and it was also a brand heavily marketed by Intel, putting the firm on the map for many computer-illiterate consumers.

- The Pentium Pro? It could rival the RISC chips of its time and was the core architecture of all Intel processors for around ten years.

- The 8088? It won the IBM PC market, paving the way for a bright future for its descendants.

In my opinion, there is no doubt that the crown has to go to the Intel 80386. It is indeed the first 32-bit CPU in the x86 family. But it is way more than that.

Despite being less “elegant” than its nemesis the Motorola 68030, and less powerful than the countless RISC chips that began to emerge around the same time, I dare say that the 80386 is not only the most important CPU Intel ever produced, but also one of the most important CPUs ever produced, period. It triggered a revolution.

Here is why.

The birth of the 80386

Designing the 80386

The i386 was neither planned nor wanted by Intel.

In the late 70s, the most important project at Intel was the iAPX 432, a very ambitious CPU that was intended to be Intel's major design for the 1980s. The intention was to build a chip very well suited to the high-level languages of the time, such as Ada, by offering niceties like object-oriented programming and storage allocation in hardware. Such an ambitious design would take years to mature, so Intel launched a stop-gap design in 1976: the 8086.

The rest is history: the 8086 family won the IBM PC contract, while the iAPX 432 was introduced very late, in 1981, and with very disappointing performance. In benchmarks, it ran at a quarter of the speed of the much cheaper 80286 at the same clock frequency.

The IBM PC 5150. The very first PC equipped with a 4.77MHz Intel 8088

Needless to say, the 432 did not sell very well…

In 1982, Intel still did not recognize the importance of the PC platform and the binary compatibility with its software. The 80286 was another gap-filler CPU and had a slow start, so Intel was not very keen to pour more money into yet another x86.

By that time, Intel engineers finally understood that the 432 line was doomed; they began to work on a brand new 32-bit RISC architecture code-named P7.

In the meantime Bob Childs, one of the architects of the 286, worked underground to lay out some ideas of what could be a 32-bit extension to the 286. After about six months, Intel knew that it would need another iteration in the x86 family before the P7 was ready, and the work on the 386 got the green light, albeit with a very small team and on a shoestring budget. The team conducted extensive research into the needs of x86 customers, and it quickly became apparent that everyone hated the segmented memory scheme of the 8086 and regretted that the 80286 had missed the opportunity to get rid of it. UNIX was also becoming a thing on more affordable workstations, and the 386 team wanted to design a chip well suited for it.

Thus, being able to address a “flat memory” space was considered top priority. In order to keep compatibility with prior x86 chips, the 386 would still be segmented. But as each segment could be 4GiB long, segmentation became irrelevant in practice. Paging and the ability to provide virtual memory were also decided around that time. The address unit was very carefully designed to play well with the pipeline so that address computation would not impact performance. This was very beneficial to the 386, as we will see later on.

Early on, it was also decided that the 386 would be a full 32-bit CPU. In order to preserve binary compatibility, the existing instruction set and registers were simply extended to 32 bits, instead of designing a very different instruction set behind a “mode header”. The price to pay was that the tiny number of registers, a drawback of the x86 CPUs, could not be increased.

For a time, Intel considered using the brand new bus that was designed for the P7. However, it was very different from the 286’s bus and would require an extensive redesign of the motherboards and the support chips. So it was abandoned in favor of a less ambitious 32-bit extension of the existing bus.

Around 1984, the PC market was blossoming. Intel finally understood the importance of the x86 line, and the completion of the 80386 became top priority. As stated by Jim Slager, from the original team:

[…] probably over a 12-month period we went from stepchild to king. It was amazing because the money started pouring in from the PC world and just changed everything

In order not to steal sales from the 80386, the P7 project, which would give birth to the i960 in 1988, was retargeted to the embedded market.

The Intel i960

Sole sourcing the 386

The 386 was introduced in October of 1985.

At the time, it was common for chip design companies such as Intel to license other companies to produce their CPUs, in order to “second source” them. This was very important for winning computer designs, as the customer could be confident that in case of catastrophic yields from the “primary source”, they would not suffer a shortage of CPUs. Intel had a long partnership with AMD, dating back to the 8085. AMD and Intel cross-licensed their products, so each one could be the second source of the other. Starting from 1983, IBM itself had a license to directly produce 808x and 80286 chips, on the condition that it still bought some CPUs from Intel and did not sell its production to third parties.

But with the 80386, everything was about to change.

In 1984, IBM introduced the model 5170, also known as the PC/AT. Its main advantage over its predecessor was its 286 CPU. Intel understood that if it could establish itself as the sole source of the CPUs, it would have full control of the most valuable part of the PC.

The IBM 5170 “PC/AT”

As IBM was not much interested in the future 386 and wanted to invest massively in the 286 instead, an opportunity arose. IBM preferred to produce as many CPUs for its own use as possible, only keeping Intel as insurance in case of production troubles. The agreement between the two companies was renegotiated to please IBM concerning the 286, but IBM would not be permitted to produce 386 chips.

In 1985, without support from IBM, which was entirely focused on the 286, the prospects for the 386 were not rosy. AMD was not very interested in producing it, so the second sourcing agreement with them was not extended to the 386.

Thus, Intel became the sole source of 386 chips!

Around the same time, judges ruled in a NEC vs Intel case that the microcode was protected by copyright and that nobody could copy it without a specific license from Intel. And while NEC had been able to reverse-engineer the 8086’s microcode, doing the same for the 386 would be nearly impossible due to its complexity.

From that point, Intel was in a position to control the future of the PC market, along with Microsoft.

The Clone Wars

When IBM designed the PC, it was in a hurry. It could not wait too long before introducing its micro-computer line or it risked missing the market. Thus, instead of spending years designing every single component necessary to build it, it relied on off-the-shelf parts. On the plus side, the IBM PC could be brought to market very quickly. On the other hand, anyone could build a compatible machine by buying the same parts…

The most successful and ambitious of those clone companies was Compaq. At the time, it was the fastest company ever to reach $100 million in revenue. Still a dwarf compared to IBM, but a resourceful company nonetheless.

In September 1986, almost a full year after Intel launched the 80386, Compaq introduced the Deskpro 386. This first 386 computer was also the very first PC that did not follow the lead of IBM, which was trying to regain control of the PC market with its 286-based PC/AT.

The impact was immense.

The Compaq Deskpro 386, model 2570

According to Bill Gates:

A big milestone [in the history of the personal computer industry] was that the folks at IBM didn't trust the 386. They didn't think it would get done. So we encouraged Compaq to go ahead and just do a 386 machine. That was the first time people started to get a sense that it wasn't just IBM setting the standards, that this industry had a life of its own, and that companies like Compaq and Intel were in there doing new things that people should pay attention to.

While the Compaq Deskpro 386 was initially very expensive, its sales were respectable. More than that, it meant that IBM was no longer in the driver’s seat.

It would take IBM almost one year to offer its first 386 computer, the PS/2 Model 80. The PS/2 line tried to regain control by introducing a new proprietary bus, which was really advanced for the time, almost on par with the later PCI bus. But IBM was no longer in a position to impose its views, and the PS/2 line would never fulfill this mission.

The clones had won.

The 386 against competition

In 1985, the main challengers to the Intel x86 line were the 680x0. Most people, even at Intel, considered the 68000 far superior to the 8086, and saw the 80286 as a missed opportunity to evolve towards a clean architecture. Meanwhile, the 68020 was the natural 32-bit evolution of Motorola’s CPUs.

And while the 68K was chosen for many designs, in micro-computing but also in workstations and electronic appliances, the x86 was not very successful outside the PC market.

With the 80386, Intel finally had a serious contender.

It could even target the lucrative workstation market, with its integrated MMU and its 4GiB flat address space. But times were changing: in the workstation market, Intel and Motorola were not alone anymore, and many companies were introducing RISC designs to power their workstations.

RISC was indeed really compelling, promising to be faster and cheaper to produce. The 386, by contrast, was a very “CISCy” design, relying on a fat microcode to handle the complex x86 instruction set, in particular the very peculiar 80286 addressing modes.

In the benchmarks, the 386 was an average performer.

| CPU | Frequency (MHz) | Introduction of the CPU | MIPS (claimed) |
|---|---|---|---|
| Intel 80286 | 16 | 1982 | 2.5 |
| Intel 80386 | 16 | 1985 | 4 |
| Intel 80386 | 25 | 1985 | 6 |
| Intel i960CA* | 33 | 1990 | 66 |
| Motorola 68020 | 16 | 1984 | 2 |
| Motorola 68030 | 25 | 1987 | 6 |
| Motorola 88000 | 16 | 1988 | 17 |
| Mips R2000 | 16 | 1986 | 16 |
| Sun SPARC | 16.7 | 1986 | 10 |
| Acorn ARM2 | 8 | 1987 | 4.5 |

Source: http://www.roylongbottom.org.uk/mips.htm

(*) The i960 is the CPU resulting from the “P7” project, which competed with the 386 to become the main 32-bit architecture at Intel.

But at least it had the features required to compete on a level playing field.
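To compare chips running at different clock speeds, the claimed figures from the table can be divided by the frequency. The short Python sketch below does just that. The names `CLAIMED`, `mips_per_mhz` and `ranking` are mine, and the numbers are only the vendor claims copied from the table, so the result is a rough indication rather than a measurement.

```python
# Claimed figures copied from the table above: (CPU, frequency in MHz, claimed MIPS).
CLAIMED = [
    ("Intel 80286", 16, 2.5),
    ("Intel 80386", 16, 4),
    ("Intel 80386", 25, 6),
    ("Intel i960CA", 33, 66),
    ("Motorola 68020", 16, 2),
    ("Motorola 68030", 25, 6),
    ("Motorola 88000", 16, 17),
    ("Mips R2000", 16, 16),
    ("Sun SPARC", 16.7, 10),
    ("Acorn ARM2", 8, 4.5),
]


def mips_per_mhz(mhz, mips):
    """Claimed MIPS delivered per MHz of clock (hypothetical helper)."""
    return mips / mhz


def ranking(rows=CLAIMED):
    """Return (cpu, mhz, ratio) tuples sorted from the highest ratio to the lowest."""
    return sorted(
        ((cpu, mhz, mips_per_mhz(mhz, mips)) for cpu, mhz, mips in rows),
        key=lambda row: row[2],
        reverse=True,
    )
```

With these claimed numbers, the 16MHz 386 lands at 0.25 MIPS per MHz, on par with the 68030 (0.24) and ahead of the 286 and the 68020, while the RISC designs of the table reach between roughly 0.5 and 2.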

Now fully committed to the 386, Intel introduced the 386SX in 1988. This cheaper version was internally identical to the original 386, which was renamed 386DX, but it only had a 16-bit external data bus and came in a cheap plastic package. As it could be plugged into cheap 16-bit motherboards, its main goal was to replace the similarly priced 286, for which there were still second source providers…

In the early 90s, the installed base of 386 machines was large enough that more and more software vendors could take advantage of its 32-bitness and modern feature set.

The most important thing was that every 386, even the low-end ones, came with an integrated MMU.

The real importance of the 386

What is an MMU?

MMU stands for Memory Management Unit.

Early programs could see the whole address space of the machine. It was manageable as it was a tiny space. Programs could also write anywhere, except on a small ROM area hosting the few functions provided by the (primitive) “system”. But as the address space became bigger and the underlying systems evolved, many programs could run simultaneously.

As the operating system could no longer be served from a slow and tiny ROM, how could it isolate its code from the programs? And how could it separate the programs from each other? Another problem was that a 16-bit address can only reach 64K memory locations. How could larger addresses be efficiently computed?

One of the early answers was segmentation. One register serves as the base address of the segment. When a program accesses an address, its 16-bit value is automatically added to the segment base to form the physical address, which is then emitted on the address bus. A limit can be set to define the segment’s size. If a program tries to access beyond it, a fault is raised. The programs are thus effectively isolated from each other. Segmentation was the design choice for the 8086 memory accesses, albeit in a bare-bones form without limits or protection.
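Here is a minimal Python sketch of the idea. The function names and the `SegmentFault` exception are hypothetical and only serve the illustration. `translate` models the generic base-plus-limit scheme described above. `real_mode_address` models the 8086 flavor, where the 16-bit segment register is shifted left by 4 bits before the offset is added, there is no limit check, and the result wraps on the 20 address lines of the chip.

```python
class SegmentFault(Exception):
    """Raised when an offset falls outside the limit of its segment."""


def translate(base, limit, offset):
    """Generic segmentation: check the offset against the limit, then add the base."""
    if offset > limit:
        raise SegmentFault(f"offset {offset:#x} is beyond the limit {limit:#x}")
    return base + offset


def real_mode_address(segment, offset):
    """8086 flavor: segment * 16 + offset, truncated to a 20-bit physical address."""
    return ((segment << 4) + offset) & 0xFFFFF
```

For instance, `real_mode_address(0xB800, 0x0000)` gives `0xB8000`. With 16-bit offsets a segment can never exceed `2**16` = 65536 bytes, which is precisely the limitation PC programmers had to live with.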

A Memory Management Unit, a.k.a MMU, is a piece of hardware that will automatically handle memory translation from a “virtual address” to a physical address. We could say that the 8086 integrated hardware supporting the segmentation is a kind of primitive, and limited, MMU. The Motorola 68000 and 68010 could be paired with an external MMU, the MC68451, that also relied on segmentation schemes to translate addresses and isolate programs.

The Motorola MC68451

The 80286 came with a feature-rich segmented MMU, allowing a much more complex memory management than the 8086 and able to access up to 16MiB of memory. OS/2 1.x, intended to be the successor of MS-DOS on PCs, made good use of this MMU to provide a more modern experience.

But as PC programmers knew very well, segmented memory has limitations and can be a pain to manage. So other memory management schemes were devised.

A more modern way to design an MMU is around paging. The MMU divides the virtual address space into pages of fixed size. When a program accesses a location inside a page, the MMU reads a page descriptor that contains all the information required to translate it to the physical address. This page descriptor is usually located in memory too, so in order to stay fast enough, page-based MMUs have a small cache, the TLB (Translation Lookaside Buffer), containing the last descriptors that were accessed. In most cases, it is enough to keep excellent performance.
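The mechanism can be sketched in a few lines of Python. This is a toy model, not the design of any real MMU: the `PagedMMU` class, the 4KiB `PAGE_SIZE`, the four-entry TLB and its least-recently-used replacement policy are all assumptions made for the example, and the page table is a plain dictionary instead of a structure in memory.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size for this sketch


class PageFault(Exception):
    """Raised when no descriptor exists for the accessed page."""


class PagedMMU:
    def __init__(self, page_table, tlb_entries=4):
        self.page_table = page_table  # virtual page number -> physical frame number
        self.tlb = OrderedDict()      # small cache of the most recent translations
        self.tlb_entries = tlb_entries
        self.tlb_hits = 0
        self.tlb_misses = 0

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page in self.tlb:
            # Fast path: the descriptor is already cached.
            self.tlb_hits += 1
            self.tlb.move_to_end(page)
            frame = self.tlb[page]
        else:
            # Slow path: fetch the descriptor from the (in-memory) page table.
            self.tlb_misses += 1
            if page not in self.page_table:
                raise PageFault(f"no mapping for page {page:#x}")
            frame = self.page_table[page]
            self.tlb[page] = frame
            if len(self.tlb) > self.tlb_entries:
                self.tlb.popitem(last=False)  # evict the least recently used entry
        return frame * PAGE_SIZE + offset
```

Two accesses to the same page cost only one lookup in the page table: the second one is served by the TLB. When a page has no descriptor, the `PageFault` is what the operating system would catch to load the page or to kill the offending program.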

Very simple illustration of an integrated paged MMU

An MMU works in close relationship with the operating system, which configures it and handles the page faults. The nature and feature set of an MMU must match the expectations of the OS. While OS/2 1.x was designed around the segmented schemes supported by the 286, most other modern OSes ran better with paging.

Such was the case with many ports of UNIX, although it was not mandatory. 68000-based workstations, such as Sun’s, ditched the MC68451 and came with a custom external paged MMU. In order to recapture this market, Motorola introduced its own external MMU with paging support, the 68851, to accompany its new 68020.

And what about Intel? As it decided to make the 80386 a good match for modern UNIX, the same kind of MMU was also mandatory. The difference with the 68020 was that it came integrated into the 386. This changed everything!

The 80386 MMU

The 386 had to solve two problems: be suitable for modern OSes such as UNIX, while staying compatible with the 8086, the 80286 and all their existing and future programs and OSes. That meant that the 386’s MMU would have to support the complex segmented schemes introduced by the 286 while offering paging, required by modern OSes.

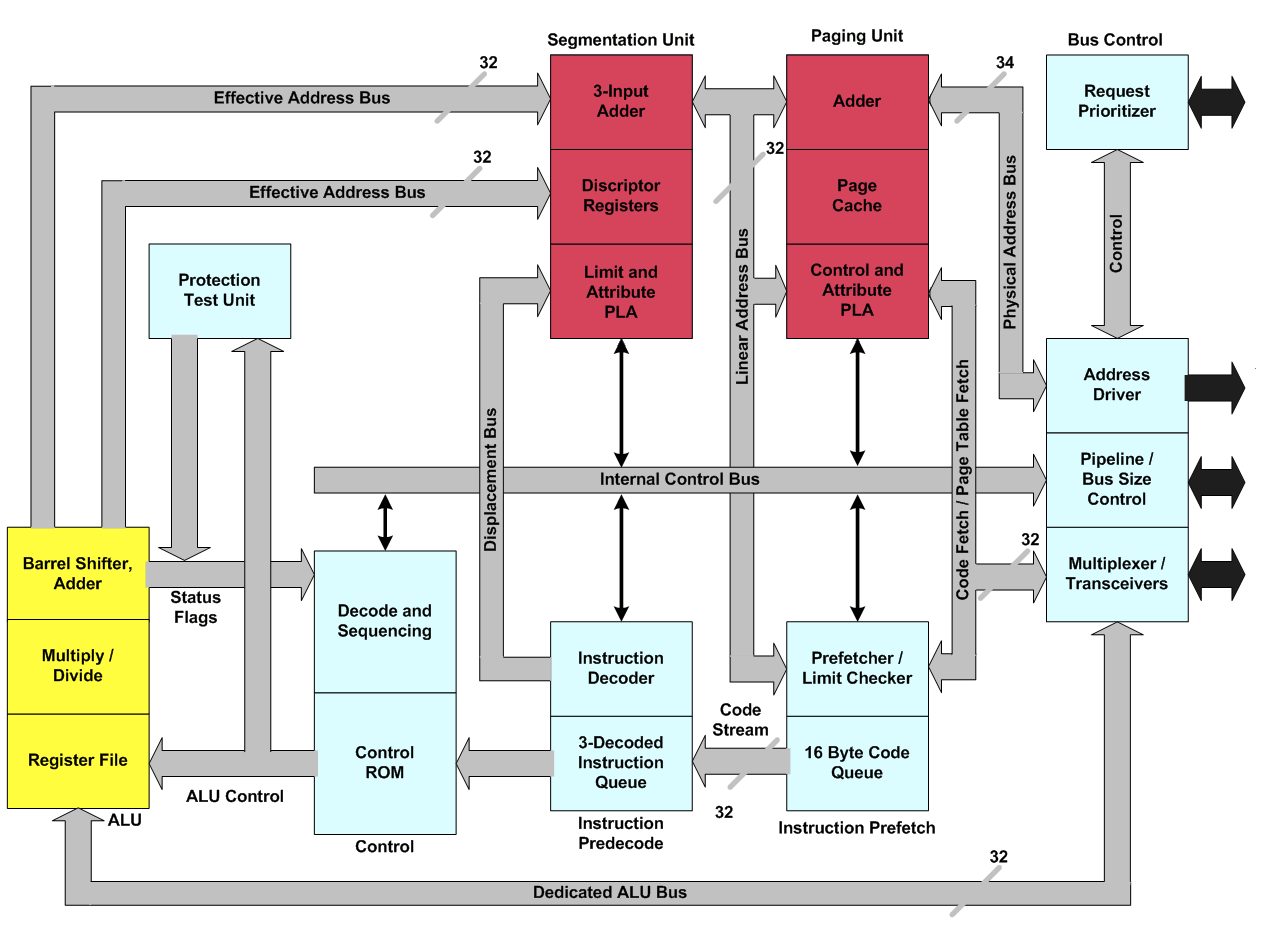

In order to overcome the challenge, the 386’s MMU is made of two units that are almost two distinct MMUs, one for segmentation and one for paging. These two units are cascaded: first the logical address goes through the segmentation unit, which uses the segment descriptor to compute the linear address. If paging is not enabled, this linear address is the physical address and the paging unit doesn’t modify it. Otherwise, the linear address is treated as a virtual one: the corresponding page descriptor is retrieved from the TLB or from memory, and the real physical address is generated.

Internal architecture of the 386: the two almost independent MMU units are apparent

The segmentation unit cannot be deactivated, but the whole memory space can be covered by a single 4GiB segment beginning at address 0, providing the equivalent of a flat memory space. The paging unit divides the linear address space into 4KiB pages. A control block checks privileges at the page level. Indeed, the 80386 supports four privilege levels, called rings, inherited from the protected mode of the 286, that are used to protect “privileged” memory from “unprivileged” reads and writes. This is another basic building block of modern protected OSes.
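The cascade of the two units can be sketched in Python as follows. It is a simplified model under several assumptions: the function names are hypothetical, the page directory is a dictionary of dictionaries rather than tables in memory, and the TLB, the descriptor flags and the privilege checks are left out. The 10/10/12-bit split of the linear address (page directory index, page table index, offset inside the 4KiB page) is not detailed in this article; it reflects my understanding of the two-level page tables of the 386.

```python
PAGE_SIZE = 4096


class SegmentFault(Exception):
    """Raised when the offset exceeds the segment limit."""


class PageFault(Exception):
    """Raised when the linear address has no page mapping."""


def segmentation_unit(base, limit, offset):
    """First stage: logical address (segment + offset) -> 32-bit linear address."""
    if offset > limit:
        raise SegmentFault(f"offset {offset:#x} is beyond the limit {limit:#x}")
    return (base + offset) & 0xFFFFFFFF


def split_linear(linear):
    """Split a linear address into (directory index, table index, page offset)."""
    return linear >> 22, (linear >> 12) & 0x3FF, linear & 0xFFF


def paging_unit(page_directory, linear):
    """Second stage: linear address -> physical address through two table levels."""
    directory_index, table_index, page_offset = split_linear(linear)
    try:
        frame = page_directory[directory_index][table_index]
    except KeyError:
        raise PageFault(f"no mapping for linear address {linear:#x}") from None
    return frame * PAGE_SIZE + page_offset


def translate(base, limit, offset, page_directory=None):
    """Cascade both units; page_directory=None models paging being disabled."""
    linear = segmentation_unit(base, limit, offset)
    if page_directory is None:
        return linear  # the linear address is used as the physical address
    return paging_unit(page_directory, linear)
```

With a flat segment (base 0, limit `0xFFFFFFFF`) the segmentation stage becomes a no-op: `translate(0, 0xFFFFFFFF, 0x00403ABC)` returns `0x00403ABC` when paging is off. With the toy page directory `{1: {3: 0x80}}`, the same call returns `0x80ABC`.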

The MMU is also involved in the “Virtual 8086” operating mode, which is a kind of hardware-supported virtual machine for running real-mode 8086 programs. These programs have the illusion of having full control of an 8086, with up to 1MiB of memory. Many V86 VMs can operate at the same time, with their virtual space translated to physical space by the MMU. If they try to operate on protected resources, such as accessing MMIOs, an interrupt is triggered that will be handled by the privileged software managing the VMs.

The MMU was designed to be an integral and streamlined part of the 80386. Under ideal conditions memory accesses are not slowed down, unlike with the MC68851, which always introduces at least one cycle of latency.

In the 68000 line, the MMU is not always present. We have seen that the 68020 had to be supported by an external chip, while the low-cost “EC” versions of the 68030 and even the 68040 did not come with the integrated MMU. By contrast, the MMU was included in every model of the 80386, even the low-cost 386SX. This made a huge difference, as programs taking advantage of the MMU and advanced operating modes could run on any 386.

New OSes

More than raw power, the 386 paved the way to new modern OSes that could run for the first time on an x86 personal computer.

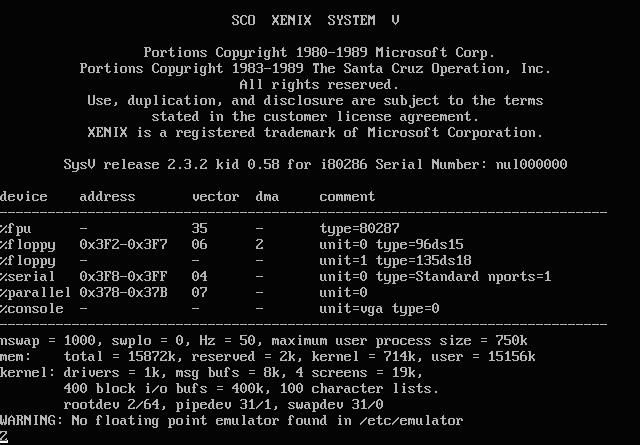

Xenix

Xenix was one of the first UNIX ports for micro-computers, born from a partnership between Microsoft and SCO. In 1980 a port for the 8086 was announced. But without a true MMU, it could not protect the memory so user-space was not separated from kernel-space. A better 286 version took advantage of the “protected mode” of operation to behave more like its brethren running on workstations. And in 1987 a port to the 386 closed the gap by making use of pagination and became the first modern 32-bit OS to run on an x86.

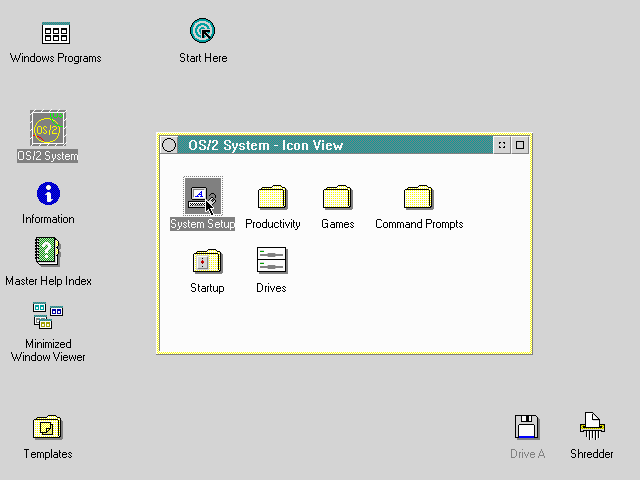

OS/2

OS/2 was at first a joint development of Microsoft and IBM, before Microsoft left it to focus exclusively on Windows. Although released in 1987, the first versions targeted the 286, as OS/2 was primarily intended for the PS/2 line of IBM computers, most of them sporting this CPU. It made good use of the protected mode and was considered an advanced OS for the time. But it was only with version 2.0, in 1992, that OS/2 became a 32-bit OS. In the meantime, it suffered from the segmented memory scheme and the lack of Virtual 8086 mode: its support of the all-important DOS applications was poor and lagged even behind Windows/386.

Nevertheless, OS/2 2.0 was the first widely used 32-bit OS on a personal computer.

OS/2 2.0

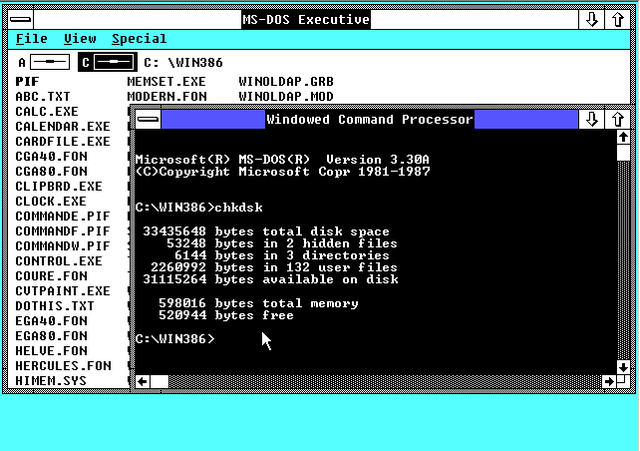

Windows

Windows began providing an “operating environment” specific to the 80386 as early as 1987 with Windows/386, quickly followed by the 2.0 release. It was still a 16-bit OS and did not expose the 32-bit flat memory space, but it made good use of the 386 to virtualize DOS sessions in Virtual 8086 mode. Those sessions could run in parallel without being aware of each other. This was critical for many businesses still relying on DOS software for their day-to-day operations. And thanks to the MMU, expanded memory (EMS) could also be offered to DOS programs, emulated by a protected-mode driver on top of extended memory.

This was of course refined by Windows 3.0 and Windows 3.1, which were a tremendous success. In 1993, Windows for Workgroups 3.11 dropped support for anything less than a 386, and file access and many drivers were now 32-bit.

Windows/386, first version of Windows supporting the features of the 386

The transition was finally completed by Windows 95, which made full use of the 386 MMU, exposing the flat memory space, making use of paging and virtual memory and so on… Some code was still 16-bit, but DOS was reduced to little more than a bootloader, and it gave a taste of a modern, fully fledged 32-bit system to millions of users.

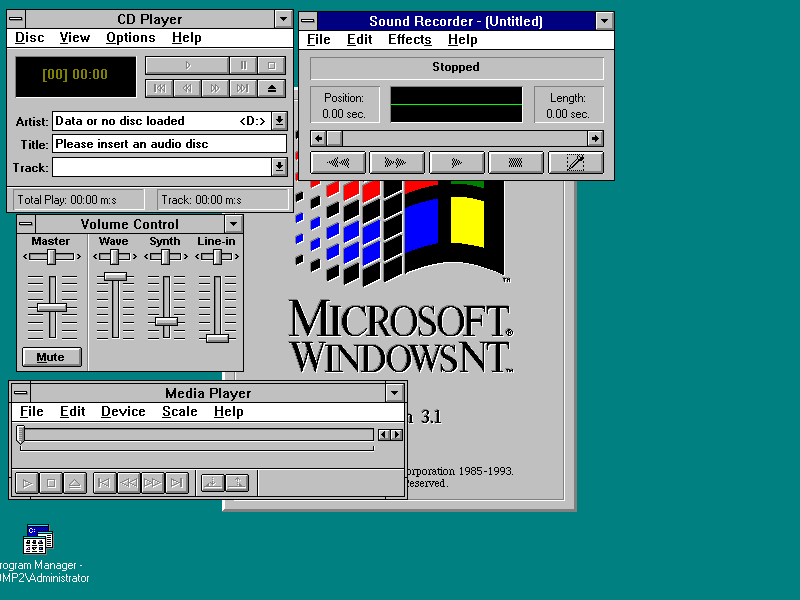

NT

The first version of Windows NT was released in July 1993. Whereas Windows 95 grew from Windows 3.11 and kept some legacy 16-bit code, NT was developed from a clean slate to become the first “pure” 32-bit version of Windows. Although one of its main features was hardware independence, the most important port was of course for the 80386, and it made full use of paging, supervisor mode and memory protection. Sharing almost the same API as Windows 95, NT could run countless Win32 applications while being as robust as any OS could be at the time. It had tremendous success, and Windows NT4 started to make a dent in the workstation *NIX market of the mid-90s. Today’s Windows 11 is its direct descendant and still runs on the NT kernel.

Windows NT made its debut with a 32-bit version of “Program Manager” as its shell

Windows NT 3.1

Linux

But the most important OS born on a 386 is none of those above.

The prodigal OS is Linux, whose birth is intimately linked to the 386. Around January 1991, Linus Torvalds, a Finnish student, bought a brand new 386 PC.

Like any good computer purist raised on a 68008 chip, I despised PCs. But when the 386 came out in 1986, PCs started to look, well, attractive. They were able to do everything the 68020 did, and by 1990, mass-market production and the introduction of inexpensive clones would make them a great deal cheaper. I was very money-conscious because I didn’t have any.

This machine was a good match to run Minix, a small UNIX clone designed to teach the inner side of operating systems to students. But Linus was not satisfied by the terminal emulator of Minix. The need for a good one was crucial to him, as he used it to connect to the computers at university. So Linus started to write his own terminal emulation program, bare-metal in order to also learn how the 386 hardware worked. His terminal could read the keyboard and of course also display text on the monitor. He designed it around two independent threads, so he had to write a small “task switcher”.

A 386 had hardware to support this process. I found it was a cool idea.

As Linus wanted to download programs, he had to write a disk driver to store them. That’s how, one feature after another, what would later be known as Linux was on its way to becoming a fully fledged operating system. In mid-1991, Linus asked for a copy of the POSIX specifications, and on the 25th of August 1991 he announced on the newsgroup comp.os.minix that he was working on a new operating system: “just a hobby, won’t be big and professional like gnu”.

The rest is history.

Conclusion

The 80386 really is the most important CPU of the x86 line.

Technically, the 80386 was a fine chip. Performance-wise, it was nothing groundbreaking and lagged behind its RISC contemporaries. But it was very feature-complete, with its modern and fast MMU and its different modes of operation, which allowed it to access 4GiB of flat memory while staying compatible with all software written for the x86. This allowed Windows to gently transition into modernity. Actually, the 386’s memory handling abilities were good enough that its successors, while becoming ever more powerful, did not add anything major in this regard for almost 20 years.

The main branch of Linux only dropped i386 support in 2013!

But it was commercially that the 386 had the most importance. While Intel did not initially believe that there was demand for a 32-bit x86, it came to realize that the x86 was its future. This change of stance convinced the market that the x86 was here to stay. The adoption of the 386 by the major cloners sidelined IBM and demonstrated that there was a credible and open alternative. Meanwhile, Intel became the only source of the most powerful x86 CPU, a major step in its journey to dominate the CPU market.

Mass produced, the 386 quickly fell in price, and 386-based machines became more affordable than any other, democratizing access to an MMU. Making good use of those abilities, Windows introduced millions of people to modern computing, the NT kernel demonstrated that a robust OS could run on cheap “beige” PCs, while we can say with confidence that Linux would not exist without the 386.

That is why I say that the 80386 is the most important chip ever designed by Intel.

Hail to the 386!