Yes, the SEGA Saturn was designed with 3D in mind

There is a widespread belief that the SEGA Saturn is a 2D powerhouse but is not very good at 3D, because those capabilities were added as an afterthought.

This article will try to demonstrate that, on the contrary, SEGA was very serious about 3D and that the Saturn was engineered to be very capable in this domain. Mistakes were made, but not because the engineers did not take 3D seriously or were incompetent.

The dawn of the 3D era

The principles for displaying 3D images on computer screens were well known as early as the late 70s. There are two main approaches:

- Raytracing, which simulates the physical properties of light and its interactions with matter. The algorithms behind raytracing are relatively simple, but the computational requirements for producing real-time images were far beyond the reach of any hardware until very recently. It is irrelevant for the remainder of this article.

- Rasterization, a collection of tricks that try to approximate a realistic color for each pixel displayed on the screen. The quality of the approximation depends on the available computing power, and on the complexity and number of algorithms that can be used to compute each pixel.

Pre-5th generation hardware

The so-called 5th generation of game consoles was the first to make hardware dedicated to producing rasterized 3D images commonplace. Before that period, 3D was entirely computed in software, using progressively more complex algorithms as machines became more powerful.

Home computers were well suited to 3D, as they benefited from a frame-buffer: a memory area that contains the data describing an entire frame, which can be both written by the program and read by the video circuitry. The program can compute the value of each pixel and store it in the frame-buffer.

Hunter on Amiga (source: Wikipedia)

By contrast, 8-bit and 16-bit game consoles were designed to be as efficient as possible at running 2D scrolling games. In order to spare expensive memory, they did not have any frame-buffer. Instead, they generated graphics using tiles and tile-maps. A screen is composed of tiles of fixed size, indexed from a dictionary called a tile-set. In perfect synchronization with the position of the electron beam of the CRT, the hardware computed the color of each pixel by going from the tile-map, to the tile, then to the pixel within that tile. No frame-buffer was needed.
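
To make that three-step lookup concrete, here is a minimal Python sketch. The 8x8 tile size, the nested-list layout and the names `pixel_color` and `solid_tile` are assumptions chosen for illustration; real consoles did this in silicon, for every pixel, as the beam swept the screen.

```python
TILE_SIZE = 8  # assumption: 8x8-pixel tiles

def pixel_color(x, y, tile_map, tile_set, palette):
    """Color of screen pixel (x, y), computed on the fly without a frame-buffer."""
    tile_index = tile_map[y // TILE_SIZE][x // TILE_SIZE]  # 1. which tile covers this pixel
    tile = tile_set[tile_index]                             # 2. fetch that tile from the tile-set
    color_index = tile[y % TILE_SIZE][x % TILE_SIZE]        # 3. the pixel inside the tile
    return palette[color_index]

def solid_tile(color_index):
    """A hypothetical tile filled with a single palette index."""
    return [[color_index] * TILE_SIZE for _ in range(TILE_SIZE)]

# A 16x16-pixel screen made of 2x2 tiles, drawn from a two-tile tile-set
tile_set = [solid_tile(0), solid_tile(1)]
tile_map = [[0, 1],
            [1, 0]]
palette = ["black", "white"]

# Emulate the beam sweeping the first scanline
scanline = [pixel_color(x, 0, tile_map, tile_set, palette) for x in range(16)]
```

Only the small tile-set and tile-map live in memory; the full image never exists anywhere, which is exactly why drawing an arbitrary 3D scene on such hardware is so awkward.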

But 3D graphics do not work like 2D and cannot be encoded using a predefined set of tiles. In order to render 3D, a programmer had to compute the scene using the limited memory of the machine and produce the corresponding tile-set and tile-map on the fly. Possible, but cumbersome and very time-consuming. That is why consoles were not very good at producing 3D without assisting hardware embedded in the cartridge.

The SuperFX is a dedicated RISC CPU embedded in the cartridge (source Wikipedia)

How to render a true 3D image?

About false 3D

If there is true 3D, there must be false 3D, right?

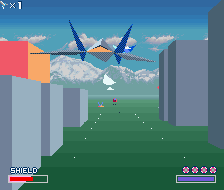

Indeed, what this article considers a “false 3D” engine uses a lot of tricks to give the illusion of 3D but always has limitations. Obvious examples are all the games using zoomed sprites such as Outrun or Afterburner. We play in a pseudo-3D world and we cannot circle around the objects or make a U-turn.

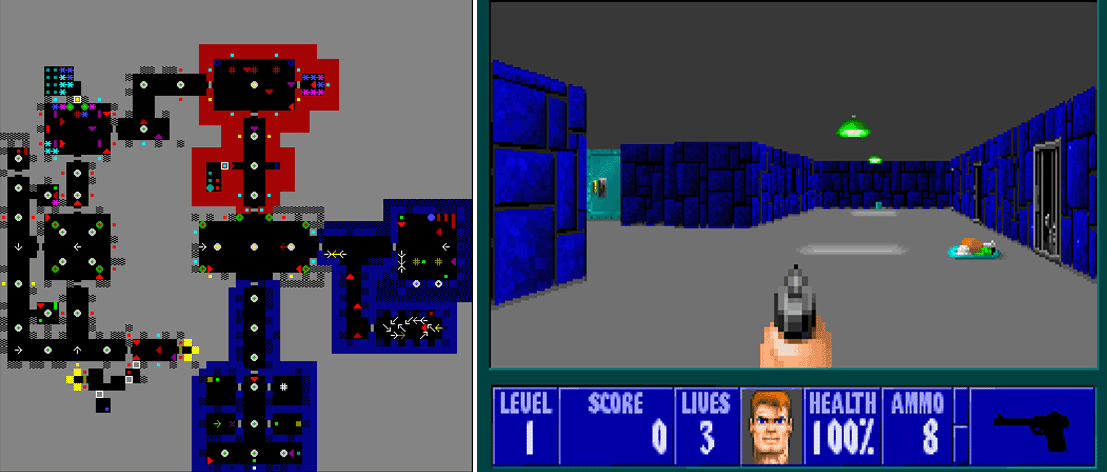

Less obvious: Wolfenstein 3D and Doom. Wolfenstein 3D uses a clever technique called raycasting. But there can be no difference in height within a level, and all the walls and corridors must be laid out at right angles. In fact, Wolfenstein levels were edited with a simple evolution of Commander Keen’s level editor. Doom goes much further to give the illusion of 3D, but there are still several limitations, such as the impossibility of having two sections on top of each other.

Ultima Underworld and Quake are true 3D games that do not suffer from such limitations, even though all the characters and items in the former are still zoomed sprites.

The 1st level of Wolfenstein 3D: a 2D game at heart, faking a 3D point of view
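
Why raycasting is "2D at heart" shows up clearly in code: the level is just a 2D grid, and each screen column casts one ray across it. The sketch below is the textbook grid-traversal (DDA) raycaster, not id Software’s actual code; the tiny `LEVEL`, the 200-pixel screen height and the function names are illustrative assumptions, and the usual fisheye correction is omitted.

```python
import math

# A hypothetical level: '#' is a wall cell, '.' is empty floor. There is no height axis at all.
LEVEL = [
    "#####",
    "#...#",
    "#...#",
    "#...#",
    "#####",
]

def cast_ray(px, py, angle, level=LEVEL):
    """Distance from (px, py) to the first wall cell along `angle` (radians), via grid DDA."""
    dx, dy = math.cos(angle), math.sin(angle)
    map_x, map_y = int(px), int(py)
    # Distance along the ray needed to cross one whole cell horizontally / vertically
    delta_x = abs(1 / dx) if dx != 0 else math.inf
    delta_y = abs(1 / dy) if dy != 0 else math.inf
    step_x = 1 if dx > 0 else -1
    step_y = 1 if dy > 0 else -1
    # Distance along the ray to the first vertical / horizontal grid line
    side_x = ((map_x + 1 - px) if dx > 0 else (px - map_x)) * delta_x
    side_y = ((map_y + 1 - py) if dy > 0 else (py - map_y)) * delta_y
    while True:
        # Step into whichever neighbouring cell the ray reaches first
        if side_x < side_y:
            map_x += step_x
            dist = side_x
            side_x += delta_x
        else:
            map_y += step_y
            dist = side_y
            side_y += delta_y
        if level[map_y][map_x] == "#":
            return dist

def wall_height(distance, screen_height=200):
    """Height in pixels of the wall slice drawn for one screen column."""
    return screen_height / distance

# One ray per screen column; here, three sample directions from the middle of the room
distances = [cast_ray(2.5, 2.5, math.radians(a)) for a in (0, 90, 180)]
```

Every wall slice is a vertical strip whose height depends only on distance, which is why floors cannot change altitude and rooms cannot be stacked.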

3D techniques

By contrast, a true 3D engine does not restrict the shape or layout of the level and objects, nor the position of the point of view. The limited power of the machines limits the fidelity of the rendering of the scene, but as time passed, more and more precise renderings became possible.

The basis of the rendering of any true 3D image is the vertex: a point in space. Any object in a 3D scene can be represented by a collection of such points. The problem is: given some objects in a scene, and a camera pointing at them, where will the vertices be on the screen? It can be solved by projecting the visible 3D space onto the plane of the screen.

A parallelogram described by 6 vertices

3D cube projected on the screen plane (source Wikipedia)
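
A minimal perspective projection can be written with similar triangles: a vertex’s screen offset is its camera-space offset divided by its depth. This sketch assumes vertices are already expressed relative to the camera (z pointing into the screen); the 320x224 screen size, the focal length of 256 and the name `project` are arbitrary choices for illustration.

```python
def project(vertex, focal_length=256.0, screen_w=320, screen_h=224):
    """Project a camera-space vertex (x, y, z), with z > 0 in front of the camera."""
    x, y, z = vertex
    if z <= 0:
        raise ValueError("vertex is behind the camera")
    # Similar triangles: offsets shrink in proportion to the distance z
    sx = screen_w / 2 + focal_length * x / z
    sy = screen_h / 2 - focal_length * y / z  # screen y grows downwards
    return sx, sy

# The 8 corners of a cube of side 2, placed 5 units in front of the camera
cube = [(x, y, z + 5) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
screen_points = [project(v) for v in cube]
```

The division by `z` is what makes distant edges of the cube appear smaller than near ones.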

For each point, this requires a lot of trigonometric calculations that can be efficiently performed using matrices. Early computers lacked power and could only process a handful of vertices per frame, and only a few frames per second.
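
The reason matrices help is that the trigonometry is done once per object, when the matrix is built; applying it to each vertex then costs only multiplications and additions. A minimal sketch, assuming a rotation around the vertical axis followed by a translation (the function names are hypothetical):

```python
import math

def rotation_y(angle):
    """3x3 rotation matrix around the vertical (Y) axis: one cos and one sin in total."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]

def transform(matrix, translation, vertex):
    """Rotate then translate one vertex: 9 multiplications, no trigonometry."""
    return tuple(
        sum(matrix[row][col] * vertex[col] for col in range(3)) + translation[row]
        for row in range(3)
    )

# Turn an object a quarter turn and push it 5 units in front of the camera
m = rotation_y(math.pi / 2)
moved = transform(m, (0.0, 0.0, 5.0), (1.0, 0.0, 0.0))
```

With hundreds of vertices per frame, those multiply-add chains are exactly the workload that dedicated math hardware was meant to absorb.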

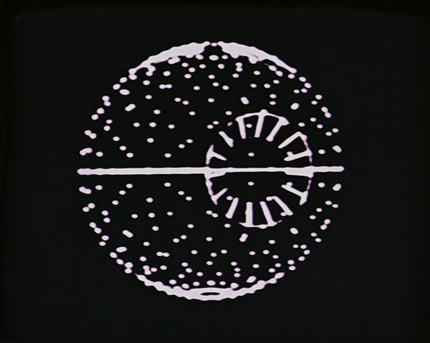

A famous spherical object drawn with vertices

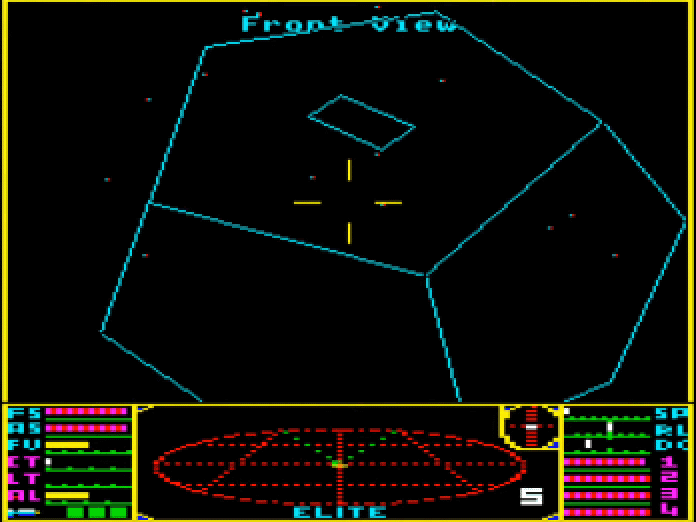

Once we have the position of the vertices on the screen, we can add fancier things. The very first is to link the vertices with lines to draw the outline of the objects, resulting in wireframes.

Elite on BBC Micro
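
Drawing a wireframe boils down to rasterizing one line per edge between projected vertices. The sketch below uses Bresenham’s classic integer line algorithm; the model representation (a list of screen points plus index pairs for edges) and the function names are assumptions for illustration.

```python
def bresenham(x0, y0, x1, y1):
    """Integer pixels on the segment from (x0, y0) to (x1, y1), Bresenham's algorithm."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return points
        e2 = 2 * err
        if e2 >= dy:   # step horizontally
            err += dy
            x0 += sx
        if e2 <= dx:   # step vertically
            err += dx
            y0 += sy

def wireframe(screen_vertices, edges):
    """Set of pixels lit by every edge (a pair of vertex indices) of a projected model."""
    pixels = set()
    for a, b in edges:
        pixels.update(bresenham(*screen_vertices[a], *screen_vertices[b]))
    return pixels

# A small triangle drawn as three edges
triangle = [(0, 0), (2, 0), (0, 2)]
lit = wireframe(triangle, [(0, 1), (1, 2), (2, 0)])
```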

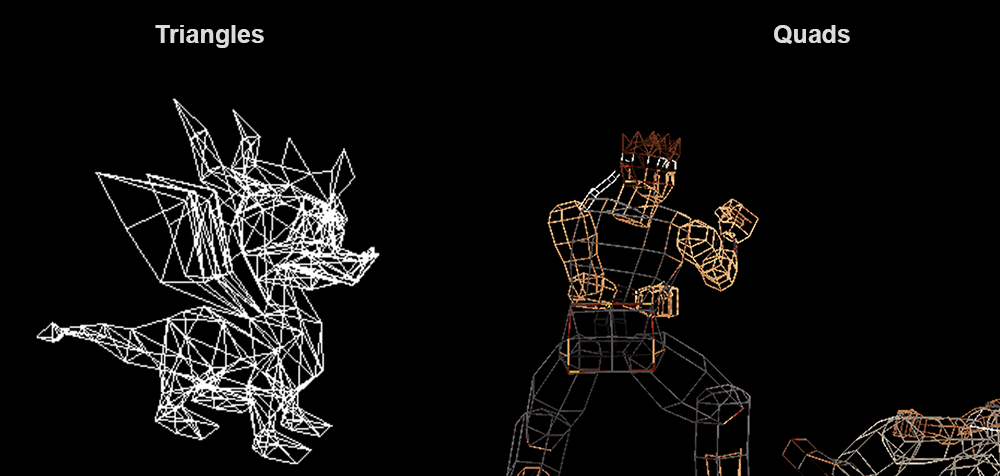

For more complex scenes, we group vertices to form faces, the basic components of any 3D object. The most common way of grouping vertices is by forming triangles.

By combining triangular faces, we can approximate any shape, and they have the advantage that all the vertices of a triangle lie on a single plane. But we can also form faces from groups of four vertices, forming quadrilaterals, or quads. They can also be used to approximate every shape… except triangles! Their advantage is that fewer quads are required to approximate curved surfaces.

Wireframe models of Spyro on PlayStation and Virtua Fighter on Saturn (source: Copetti)

With a more powerful computer, we can remove the hidden lines of the wireframes.
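
One cheap way to hide many of those lines is back-face culling: once projected, a face seen from behind has its vertices wound in the opposite direction, which the sign of its screen-space area reveals. This is a standard technique swapped in for illustration, not necessarily what any given game used; on its own it only fully solves hidden surfaces for convex objects. The winding convention and function names are assumptions.

```python
def signed_area(p0, p1, p2):
    """Twice the signed area of the screen-space triangle p0, p1, p2."""
    return (p1[0] - p0[0]) * (p2[1] - p0[1]) - (p2[0] - p0[0]) * (p1[1] - p0[1])

def is_front_facing(face):
    """Assumes visible faces are wound so that their signed area is positive.

    Only the first three vertices are tested, which is enough for planar,
    convex faces such as triangles or quads.
    """
    return signed_area(face[0], face[1], face[2]) > 0

def cull_back_faces(faces):
    """Keep only the faces turned towards the viewer."""
    return [face for face in faces if is_front_facing(face)]

front = [(0, 0), (1, 0), (1, 1), (0, 1)]
back = list(reversed(front))  # the same quad, seen from behind
```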

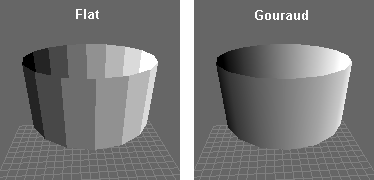

We can also fill the visible faces with a single color, producing what is now called flat-shaded 3D. We can add a directional light that modifies the color of the surfaces. Computing the correct color, taking lighting into account, requires some serious computing power, while setting all the pixels of the faces requires enough memory bandwidth.
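
A common way to compute that single color is Lambert’s cosine law: the brightness of a face is proportional to the cosine of the angle between its normal and the light direction, plus a constant ambient term so that unlit faces are not pitch black. The 0.2 ambient level and the function names below are assumptions for the sketch.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def flat_shade(base_color, normal, light_dir, ambient=0.2):
    """One color for a whole face: Lambert diffuse term plus a constant ambient term.

    light_dir points from the surface towards the light.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))  # cosine of the angle, clamped at 0
    intensity = min(1.0, ambient + (1.0 - ambient) * diffuse)
    return tuple(round(c * intensity) for c in base_color)

lit_face = flat_shade((200, 100, 50), normal=(0, 0, 1), light_dir=(0, 0, 1))
dark_face = flat_shade((200, 100, 50), normal=(0, 0, -1), light_dir=(0, 0, 1))
```

The square root and the dot product are needed once per face, which gives an idea of the arithmetic involved even before a single pixel is written.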

The next step is to compute a more realistic shading of the faces. We can achieve an interesting result by computing the color of each vertex of a face, then interpolating between them so that each pixel of the face gets a different color. This smooths the surfaces and allows a first approximation of specular highlights on curved surfaces. One of the most popular algorithms is called Gouraud shading.

There are the same number of faces, but Gouraud shading smooths them
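
The interpolation step can be sketched with barycentric weights, which express a pixel as a weighted mix of the face’s vertices. This is an illustration under assumptions: it works on a triangle with three vertex colors, whereas the Saturn applies Gouraud shading to quads with four corner values, and the function names are hypothetical.

```python
def barycentric(p, a, b, c):
    """Weights (w_a, w_b, w_c) of point p relative to triangle (a, b, c); they sum to 1."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    w_a = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w_b = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return w_a, w_b, 1.0 - w_a - w_b

def gouraud(p, vertices, colors):
    """Color at pixel p, blended from the colors computed at the three vertices."""
    weights = barycentric(p, *vertices)
    return tuple(
        sum(w * color[i] for w, color in zip(weights, colors))
        for i in range(3)
    )

tri = [(0, 0), (10, 0), (0, 10)]
cols = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]   # red, green and blue corners
center = gouraud((10 / 3, 10 / 3), tri, cols)    # an even mix at the centroid
```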

Finally, we can add details to each surface by filling it with a texture. A texture is a bitmap image that is used to fill faces. It can work in combination with Gouraud shading, which will then modify the color of the texture depending on the pixel position relative to the vertices of its face. Using a texture to “colorize” an object is called texture mapping.

In order to correctly map the texture, we must retrieve the right texture elements, a.k.a. texels (pixels from the texture), to compute the correct color of the corresponding pixel on the screen. Once again, this requires a large amount of computing resources.
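
In its simplest form, retrieving a texel means turning texture coordinates into a row and column and taking the nearest texel, with no filtering; the result can then be modulated by the Gouraud intensity. The 0-to-1 coordinate convention and the names `fetch_texel` and `modulate` are assumptions for this sketch.

```python
def fetch_texel(texture, u, v):
    """Nearest-neighbour lookup: (u, v) in [0, 1] picks the closest texel, no filtering."""
    height, width = len(texture), len(texture[0])
    tx = min(int(u * width), width - 1)   # clamp u == 1.0 to the last column
    ty = min(int(v * height), height - 1)
    return texture[ty][tx]

def modulate(texel, intensity):
    """Darken or brighten a texel by a shading intensity, e.g. from Gouraud shading."""
    return tuple(min(255, round(c * intensity)) for c in texel)

texture = [[(255, 0, 0), (0, 255, 0)],
           [(0, 0, 255), (255, 255, 255)]]
```

Taking the nearest texel instead of blending neighbours is what produces the blocky, pixelated look of early textured 3D.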

And here we are. In 1994, when the Saturn hit the Japanese market, a powerful PC could compute everything up to this point at a reasonable speed, entirely in software. But a powerful PC was expensive, and there was no way to put a Pentium or even a 486 in a home console at a reasonable price.

And as you can see, even on a beefy and expensive PC of 1993, the game does not display many faces or textures. It’s a bit unimpressive. That is how costly it was to do 3D in pure software.

And that is why the 5th generation consoles were designed with features to accelerate 3D. Yes, even the Saturn 😉

The architecture of the Saturn

The Saturn comes with a CD-ROM drive, advanced audio capabilities and other novelties of its generation. Here, I will only discuss the components used to generate the frames.

CPU

The SEGA Saturn is built around two SH2 processors from Hitachi, each clocked at 28.6MHz. I will talk later about the difficulty of programming for these two almost symmetrical CPUs.

The SH2 was a capable processor for its time. It is an evolution of the SH1, which Hitachi had failed to sell to any big customer. So when SEGA’s need arose, with the prospect of supplying tens of millions of units, Hitachi knew that it could not miss this opportunity. It convinced SEGA that the SH1 could fulfill the need, but the SH1 was a little weak at multiplying 32-bit numbers. Its design was thus modified to enhance its multiplier, and the new revision was christened SH2.

Two notes here.

32-bit multiplications are of little use for 2D games. SEGA clearly had 3D in mind.

Some claim that the SH2 was a poor CPU. This is not the case, as illustrated below.

Synthetic benchmark of various CPUs at 25MHz (source: Microprocessor Report 1994/11/14)

For reference, the PlayStation CPU is a 33.8MHz R3000. It is faster than a Saturn’s SH2, but they play in the same league. Nevertheless, it is true that when SONY unveiled its console, SEGA added a second SH2. On paper, the Saturn now packed more CPU power than the PlayStation.

The two SH2s do not play the exact same role in the console. One is the MAIN CPU. It is designed to send programs to the SECONDARY one, which acts more like a co-processor. As they share access to some resources such as memory, it is difficult to make them work together without each one impeding the other. More on that later.

DSP

As we saw earlier, 3D processing generally relies on matrix operations. This means a lot of multiplications and additions. Even with its two SH2s, the Saturn would not be powerful enough for the 3D graphics envisioned by SEGA without its DSP. A DSP is a kind of microchip that is weak at running “general purpose” code, but is king when adding and multiplying numbers in tight loops. Just the kind of operation that is useful to manipulate vertices and project them from 3D space to 2D space 😉

Like the secondary SH2, the DSP acts like a coprocessor.
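
The kind of tight loop a DSP excels at looks like the sketch below: a matrix-vector product written as chains of multiply-accumulate steps on fixed-point integers. The 16.16 fixed-point format and the function names are assumptions for illustration; the sketch does not model the Saturn DSP’s actual word sizes or instruction set.

```python
FRAC_BITS = 16           # assumption: 16.16 fixed-point numbers
ONE = 1 << FRAC_BITS

def to_fixed(x):
    return int(round(x * ONE))

def to_float(f):
    return f / ONE

def mat_vec_fixed(matrix, vector):
    """Matrix x vector in fixed point, written as a chain of multiply-accumulates."""
    result = []
    for row in matrix:
        acc = 0                              # wide accumulator
        for m, v in zip(row, vector):
            acc += m * v                     # one multiply-accumulate step
        result.append(acc >> FRAC_BITS)      # rescale the product back to 16.16
    return result

identity = [[ONE, 0, 0], [0, ONE, 0], [0, 0, ONE]]
half = [[to_fixed(0.5), 0, 0], [0, to_fixed(0.5), 0], [0, 0, to_fixed(0.5)]]
vertex = [to_fixed(1.5), to_fixed(-2.0), to_fixed(3.25)]
```

Integer multiply-accumulates avoid floating-point hardware entirely, which is why this style of arithmetic suited consoles of the era.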

VDPs

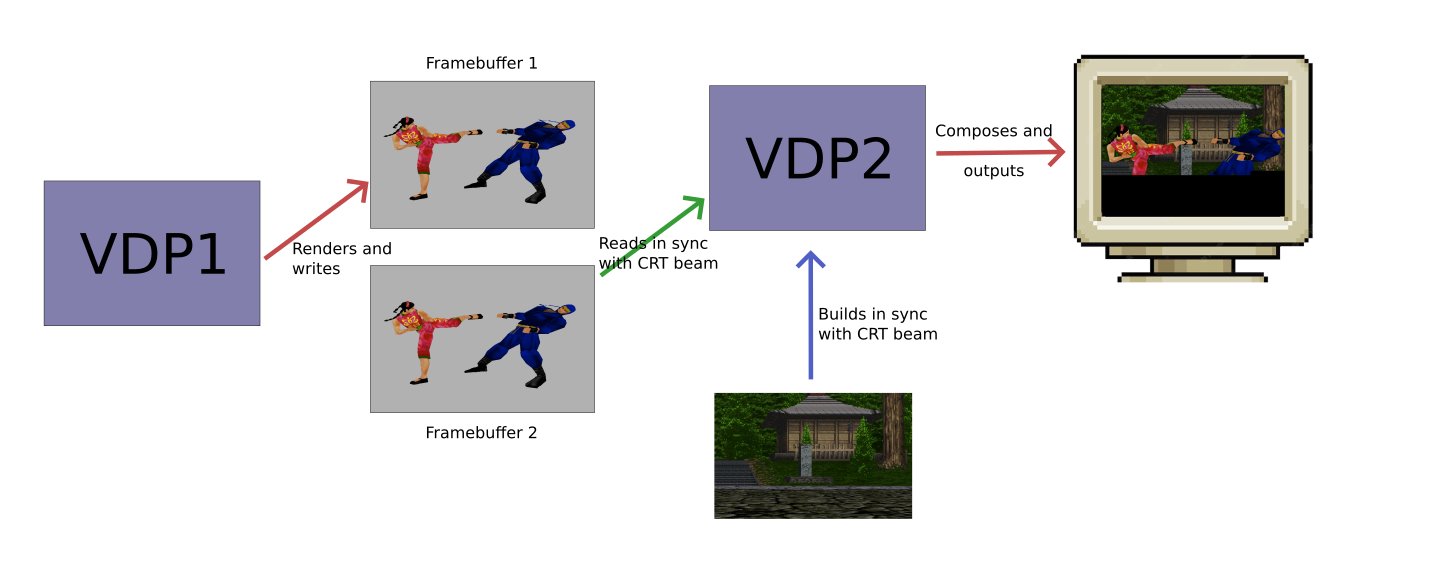

Finally, the Saturn sports two “Video Display Processors”, known as VDP1 and VDP2.

VDP1, like its contemporary graphics coprocessors (the term GPU had not been coined yet), is configured through a lot of registers and is “hard-coded” to process a list of commands from the main CPU.

It is built around the concept of “distorted sprites”, a term that appears in SEGA’s own documentation. And this name may be the main cause of a common misunderstanding about the Saturn: that all of this was a hack, devised late in the engineering process to give the console some pseudo-3D capabilities.

A “distorted sprite” is a 2D quadrilateral that can be of any size or shape. It can be filled with a single color or with an image that will be deformed to fit. As we saw earlier, quads are a viable way to approximate objects. As VDP1 can draw thousands of them each frame, it is able to compete with any other machine of the time.

The concept of “distorted sprite”, as illustrated in the VDP1 User’s Manual

But VDP1 only processes 2D coordinates. So the 3D vertices have to be projected to the 2D plane of the screen in order to define the quads it has to draw: a perfect job for the DSP!

Gouraud shading can optionally be applied per quad. As we saw, Gouraud shading was an advanced technique at the time: it modifies the color of each pixel of the quad based on information carried by each of the four vertices. It is very useful for simulating local lighting on the face, and mostly dedicated to 3D.

VDP1’s output is written to a real frame-buffer in a dedicated VRAM. It is large enough for games to use “double buffering”, a technique still in use today: VDP1 writes into one buffer while the next stage reads the other buffer, which was written during the previous frame. With double buffering, there is no risk of presenting an incomplete frame to the user.
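
The principle of double buffering fits in a few lines. This hypothetical `DoubleBuffer` class only models the idea; on the real console, the swap is triggered through VDP1 registers, which are not modeled here.

```python
class DoubleBuffer:
    """Two frame-buffers: one is drawn into while the other is displayed."""

    def __init__(self, width, height):
        self.buffers = [[[0] * width for _ in range(height)] for _ in range(2)]
        self.draw_index = 0  # the buffer the renderer (VDP1's role) writes to

    @property
    def draw_buffer(self):
        return self.buffers[self.draw_index]

    @property
    def display_buffer(self):
        # The buffer read by the display stage (VDP2's role): the previous, complete frame
        return self.buffers[1 - self.draw_index]

    def swap(self):
        """Called once per frame, after drawing is complete (typically during vertical blank)."""
        self.draw_index = 1 - self.draw_index

db = DoubleBuffer(4, 2)
db.draw_buffer[0][0] = 7          # drawing the new frame...
before_swap = db.display_buffer[0][0]
db.swap()                         # ...only becomes visible once it is finished
after_swap = db.display_buffer[0][0]
```

The cost of this safety is memory: two full frames must fit in VRAM at once.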

Later in the article, I will discuss the advantages and disadvantages of using quads instead of triangles, as the PlayStation did.

VDP2 works like a 16-bit era tile engine on steroids. Working on the same principles as the Megadrive’s VDP, it can produce several large “background” layers in up to 16 million colors, composed of individual tiles. They can be zoomed, rotated or deformed at will, and support transparency.

Like its predecessors, VDP2 produces images on the fly, in sync with the CRT. It reads the frame-buffer, blends the pixels produced by VDP1 with its own layers and sends the result to the display.

One might think that VDP2 was clearly engineered with only 2D image generation in mind. That would be too narrow a view. VDP2 can handle up to four 2D planes or two 3D planes. Those planes have depth information that VDP2 uses to correctly render the perspective, something that neither VDP1 nor the PlayStation can do! Because VDP2 generates its images one line at a time, those 3D planes are somewhat similar to what clever programming could achieve with the SNES Mode 7.

This tile-based nature allows VDP2 to fill the screen without taxing the system too much.
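
Why line-by-line generation suits a perspective floor: every pixel of a given scanline sees a flat floor at the same depth, so one depth value and one horizontal step per line are enough. The sketch below is the textbook Mode 7-style computation; it does not model how VDP2 is actually configured, and the camera parameters and the `checkerboard` texture are hypothetical.

```python
def floor_scanline(floor_texture, screen_y, screen_w, horizon_y=100,
                   camera_height=32.0, focal_length=160.0, camera_x=0.0, camera_z=0.0):
    """Sample a flat, infinite floor for one scanline below the horizon."""
    rows_below_horizon = screen_y - horizon_y
    if rows_below_horizon <= 0:
        raise ValueError("scanline must be below the horizon")
    depth = camera_height * focal_length / rows_below_horizon  # shared by the whole line
    step = depth / focal_length      # floor units covered by one screen pixel on this line
    row = []
    for sx in range(screen_w):
        world_x = camera_x + (sx - screen_w / 2) * step
        row.append(floor_texture(world_x, camera_z + depth))
    return row

def checkerboard(x, z, size=16):
    """Hypothetical floor texture: alternating 0/1 squares of `size` units."""
    return (int(x // size) + int(z // size)) % 2

line = floor_scanline(checkerboard, 120, 4)
```

Lines closer to the bottom of the screen get a smaller depth and a finer step, which is all it takes to produce a convincing perspective ground plane.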

3D on the Saturn

Quads versus Triangles

Quads and triangles can both be used as the basic element to build any 3D shape. But they are not equal.

Triangles have the advantage that their three vertices, and all the points between them, lie on the same plane.

However, if vertices are handled in a simple, “primitive” fashion, without being shared between faces, triangles require more data than quads to describe geometries, impacting performance. The simplest example would be… a quad! Drawing a quad requires two triangles, so six vertices, whereas quad-based hardware of course only requires four.
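
The arithmetic behind that claim, for a simple grid-shaped surface, can be checked in a few lines. The grid example and the function names are assumptions for illustration; the second function shows that the gap disappears when vertices are shared (indexed).

```python
def vertex_count(cols, rows, primitive):
    """Vertices sent for a cols x rows grid of cells when no vertex is shared."""
    cells = cols * rows
    if primitive == "quad":
        return cells * 4          # one quad per cell
    if primitive == "triangle":
        return cells * 2 * 3      # two triangles per cell
    raise ValueError(primitive)

def shared_vertex_count(cols, rows):
    """With shared (indexed) vertices, both primitive types use the same grid points."""
    return (cols + 1) * (rows + 1)
```

For a 10x10 patch, unshared triangles need 600 vertices against 400 for quads, i.e. 50% more transforms and projections; with sharing, both need only 121.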

Another advantage of quads is related to the way the PlayStation and the Saturn do texture mapping. Texture mapping consists of finding which texel of the texture corresponds to a pixel on the screen. Both the PlayStation and the Saturn are very limited in their texturing capabilities and lack any filtering whatsoever. This leads to their very characteristic “pixelated” textures.

Furthermore, the two consoles do not map textures onto the geometry in a perspective-correct fashion. To do so, they would need to take into account the distance of each displayed pixel from the point of view.

But the Saturn and the PlayStation no longer have this information at the texturing stage. So they only use a simple 2D “affine transformation” to make the texture match the surface to be filled, as it is displayed on the screen, without taking into account the distance of the pixels from the viewer. This leads to inaccuracies that are more visible with triangles than with quads.

The screen-space affine transform results in a more pleasing result when using quads (source: Wikipedia)

The inaccuracies can be mitigated by using many small triangles instead of a few big ones. The trade-off is that multiplying the number of triangles is costly.
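
The difference between affine and perspective-correct mapping can be shown on a single span of pixels. Perspective-correct mapping interpolates `u/z` and `1/z` linearly on screen and divides at each pixel; affine mapping interpolates `u` directly and ignores depth. The function names are hypothetical; `t` is the fraction of the way along the span on screen.

```python
def affine_u(t, u0, u1):
    """Screen-space (affine) interpolation: ignores depth entirely."""
    return u0 + t * (u1 - u0)

def perspective_u(t, u0, z0, u1, z1):
    """Perspective-correct interpolation: interpolate u/z and 1/z, then divide."""
    u_over_z = u0 / z0 + t * (u1 / z1 - u0 / z0)
    one_over_z = 1 / z0 + t * (1 / z1 - 1 / z0)
    return u_over_z / one_over_z
```

On a span whose far end is three times deeper than its near end, the middle pixel gets u = 0.5 with affine mapping but should get u = 0.25. When both ends are at the same depth, the two methods agree, which is why splitting geometry into smaller pieces, each spanning less depth, reduces the error.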

Forward texturing versus backward texturing

Perhaps the most “exotic” aspect of the Saturn is not that it produces geometries using quads, but the way they are textured.

Modern GPUs, and the PlayStation, use so-called backward texturing. For a given pixel to be displayed on the screen, the GPU determines the triangle it belongs to, then the texel position in the texture. Each of the triangle’s vertices has an associated “texture coordinate”, pointing to the corresponding texel. For each pixel of the triangle displayed on the screen, we can compute the right texel using those three texture coordinates. This system is very flexible, and the texture coordinates can even be defined outside the texture! If so, the triangle will be filled as if the texture were repeated.

PlayStation: Only the texels that are required to fill the triangle will be read. The triangle can use any portion of the texture

The Saturn does it the other way around, using a technique called forward texturing. Each quad is associated with a texture. But this time there is no texture coordinate associated with each of its vertices: the texture fills the quad in its entirety. To do so, the texture behaves as if it were deformed to fit the shape of the quad, as it is displayed on screen.

Saturn: Every texel will be read at least once. The texture covers the whole quad.

The advantages of this technique are that it is simple to implement and that the texture is accessed in linear order, which is very efficient. However, if the quad is small on screen, every texel will still be read and then written to the frame-buffer, causing many pixels to be overdrawn redundantly and wasting a lot of memory bandwidth.
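
The access pattern of forward texturing can be sketched by walking every texel in order and computing where it lands inside the quad, here with a simple bilinear blend of the four corners. This reproduces only the forward access pattern and the overdraw, not VDP1’s actual rasterizer; a real implementation must also avoid gaps when the quad is larger than the texture, which this sketch does not attempt. The function name and corner ordering are assumptions.

```python
def forward_texture(quad, texture):
    """Walk every texel of `texture` and plot it inside a screen-space quad.

    quad: screen corners (top-left, top-right, bottom-right, bottom-left),
    which receive the texture's four corners. Returns (frame, writes): the
    pixels that end up covered, and the number of frame-buffer writes issued.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    height, width = len(texture), len(texture[0])
    frame, writes = {}, 0
    for j in range(height):                       # the texture is read in linear order
        v = j / (height - 1) if height > 1 else 0.0
        for i in range(width):
            u = i / (width - 1) if width > 1 else 0.0
            # Position of this texel on the top and bottom edges, then between them
            top_x, top_y = x0 + u * (x1 - x0), y0 + u * (y1 - y0)
            bot_x, bot_y = x3 + u * (x2 - x3), y3 + u * (y2 - y3)
            px = round(top_x + v * (bot_x - top_x))
            py = round(top_y + v * (bot_y - top_y))
            frame[(px, py)] = texture[j][i]       # overwrites whatever was already there
            writes += 1
    return frame, writes

texture = [[(i + j) % 2 for i in range(16)] for j in range(16)]  # 16x16 texels
small_quad = [(0, 0), (3, 0), (3, 3), (0, 3)]                    # only 4x4 pixels on screen
frame, writes = forward_texture(small_quad, texture)
```

With a 16x16 texture squeezed into a 4x4 quad, 256 writes land on only 16 distinct pixels: 240 of them are wasted overdraw.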

The forward texturing of the Saturn is very simple and cheap to implement in silicon, but it brings a lot of disadvantages. Firstly, if you want to repeat a texture to give the illusion of detail, you have to multiply the number of quads accordingly. This is not free in terms of processing power.

Secondly, every texel always leads to read/write operations, even for small quads. That means that if a scene is built from a lot of small quads, a lot of memory bandwidth will be consumed to read and write all those texels, lowering performance.

About transparency

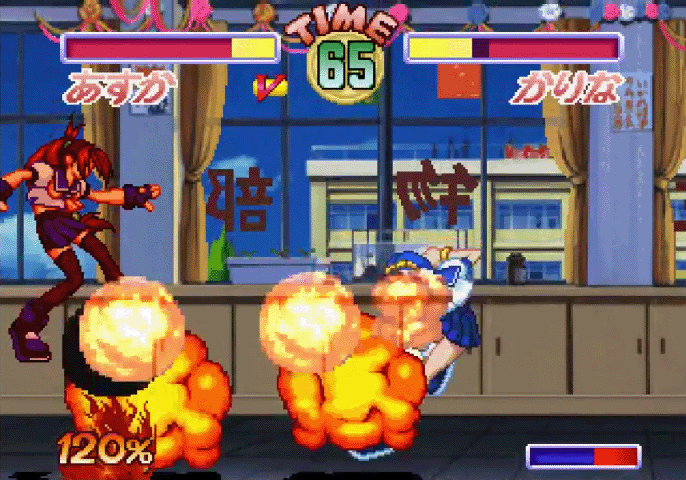

Forward texturing has another drawback: it impedes proper transparency support by VDP1. The User Manual states that “there may be some pixels that are written twice, and therefore the results of half-transparent processing as well as other processing in color calculation cannot be guaranteed”.

Forward texture mapping can lead to a lot of quirks concerning transparency

Anyway, the whole transparency business is compromised by the way VDP1 constructs the frame-buffer. If two quads overlap, the later quad overwrites the data of the earlier one. That is why the developer has to carefully draw the quads from back to front: if they overlap, the closest will hide the farthest, which is quite natural.
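
Drawing back to front is known as the painter’s algorithm. A minimal sketch, assuming each quad carries the depths of its four vertices and a precomputed list of covered pixels (both hypothetical fields): quads are sorted by average depth, farthest first, and each one simply overwrites the frame-buffer, with no per-pixel depth test.

```python
def average_depth(quad):
    return sum(quad["depths"]) / len(quad["depths"])

def painter_draw(quads, framebuffer):
    """Farthest quads first, so nearer ones overwrite them."""
    for quad in sorted(quads, key=average_depth, reverse=True):
        for pixel in quad["pixels"]:
            framebuffer[pixel] = quad["color"]
    return framebuffer

near = {"depths": [1, 1, 1, 1], "pixels": [(0, 0), (1, 0)], "color": "near"}
far = {"depths": [5, 5, 5, 5], "pixels": [(1, 0), (2, 0)], "color": "far"}
fb = painter_draw([near, far], {})
```

Sorting by a single depth per quad is an approximation: intersecting or cyclically overlapping quads can still be drawn in the wrong order.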

But what if the last drawn quad is marked as half-transparent? Its data will erase any prior data, and the transparency will reveal… the background layer drawn by VDP2!

Note how the half-transparent explosion data replaced the characters! (Source: Sega Saturn Graphic In-depth Investigations)

That is why, in most games, transparent quads are mainly used to draw shadows on the ground, as this does not lead to this kind of glitch.

But VDP2 is able to produce proper transparency effects on its own layers. Good results could be achieved by carefully using both VDPs, but this was tricky and is beyond the scope of this article.

That is why, in most games, many transparency effects were simulated using dithering: every other pixel is completely transparent.
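
The dithering trick and a true 50% blend can be compared side by side. In this sketch, `dithered` keeps only the pixels of a checkerboard pattern, so the layer underneath shows through the gaps, while `half_transparent` averages two colors as real blending hardware would. Both function names are hypothetical.

```python
def dithered(pixels, color):
    """Fake 50% transparency: draw only every other pixel, in a checkerboard pattern."""
    return {(x, y): color for (x, y) in pixels if (x + y) % 2 == 0}

def half_transparent(src, dst):
    """True 50% blend of two RGB colors, for comparison."""
    return tuple((s + d) // 2 for s, d in zip(src, dst))

square = [(x, y) for x in range(4) for y in range(4)]
mesh = dithered(square, (255, 255, 0))
```

Dithering never reads the frame-buffer, so it sidesteps the overwrite problem entirely; on a CRT with a composite signal, the checkerboard also tends to blur into something close to a real blend.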

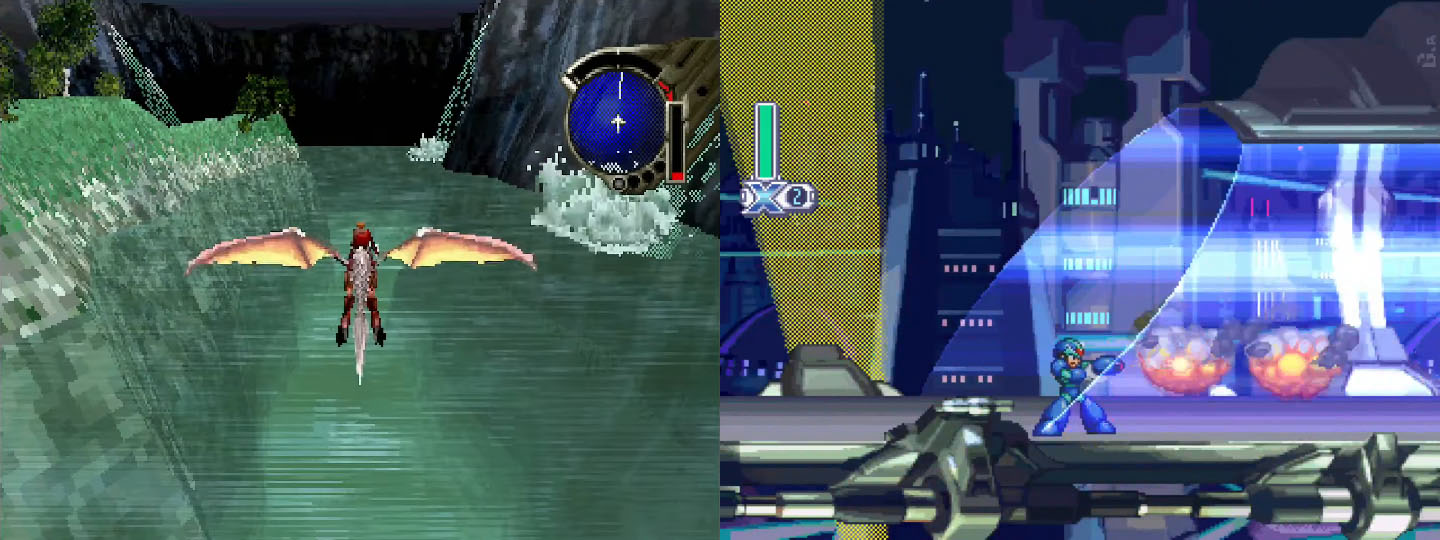

The Saturn can produce convincing transparency effects when working with both VDP1 and VDP2. On the right, the transparency of the light is simulated by dithering.

Programming for the Saturn is hard

The Saturn has the reputation of being a very hard platform to program for. Is it deserved?

Hell yes!

To begin with, in order to get the most out of the CPUs, you have to split your code to run on the two SH2s in parallel. There are a lot of complexities due to the fact that they share a lot of resources, such as memory: although each has a small internal cache, they cannot access memory at the same time. If the main CPU occupies the bus too much, the secondary CPU will stall.

Nowadays multicore CPUs are the norm, but that was not the case in the early 90s. Thus, the SH2 lacks a lot of features easing the cooperation between the two CPUs. Sharing data between them requires programming a complex messaging system using interrupts and cache flushes.

That is why many programmers decoupled operations as much as possible and tended to use the second CPU as an independent co-processor. In Sonic R, for instance, highly reflective objects were entirely rendered in software, as VDP1 did not support “environment mapping”. This was a perfect task for the secondary SH2, which could handle it almost independently.

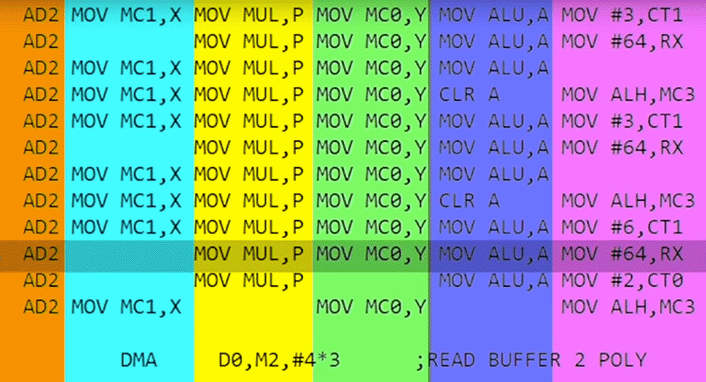

The DSP is perfect for the high-speed mathematical operations required to manipulate the vertices. The problem: it is up to the developer to write its program. This particular DSP is very powerful, but at the price of being difficult to program.

Indeed, it can perform up to six instructions at once, if the right conditions are met. But the programmer has to explicitly write which operations will occur in parallel in the assembly code, and of course not every combination is possible. Furthermore, they have to manually account for the latency of each operation. For instance, if an instruction performs a multiplication, the result won’t necessarily be available immediately. If the following instruction tries to use it before completion, the value will be incorrect. But there won’t be any assembler error or warning: just a bug at runtime.

Assembly code for the DSP. Each colored column represents a stream of instructions executed in parallel (source: GameHut)
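
To illustrate the latency hazard, here is a toy model, not an emulation of the Saturn DSP: the two-cycle latency, the register name `P` and the `ToyPipeline` class are all hypothetical. A result only lands in its register some cycles after the multiplication is issued, and reading it too early silently returns the old value.

```python
class ToyPipeline:
    """Toy pipelined unit: a result becomes visible `latency` cycles after issue."""

    def __init__(self, latency=2):
        self.latency = latency      # hypothetical value, not the real DSP's
        self.cycle = 0
        self.registers = {}
        self.pending = []           # (ready_cycle, register, value)

    def tick(self):
        """Advance one cycle and retire the results that are now ready."""
        self.cycle += 1
        waiting = []
        for ready, reg, value in self.pending:
            if ready <= self.cycle:
                self.registers[reg] = value
            else:
                waiting.append((ready, reg, value))
        self.pending = waiting

    def mul(self, dest, a, b):
        """Issue a multiplication; `dest` is only written `latency` cycles later."""
        self.pending.append((self.cycle + self.latency, dest, a * b))
        self.tick()

    def nop(self):
        self.tick()

    def read(self, reg):
        """Read a register as it is right now: no hazard detection, no warning."""
        value = self.registers.get(reg, 0)
        self.tick()
        return value

# Buggy sequence: the product is read by the very next instruction
buggy = ToyPipeline()
buggy.mul("P", 6, 7)
stale = buggy.read("P")

# Fixed sequence: the programmer inserts a wait slot by hand
fixed = ToyPipeline()
fixed.mul("P", 6, 7)
fixed.nop()
correct = fixed.read("P")
```

Nothing in the buggy sequence fails loudly; the program simply computes with a stale value, which is what makes such bugs so painful to track down.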

Then there are the VDPs. They must be manually configured through a lot of registers, VDP1 has a lot of limitations, and both must be used in perfect coordination to get the most out of the console. This adds complexity to the driving software.

This complexity was a real problem for SEGA. Studios were struggling to transition from the 2D era to the 3D era. This was a huge shift and many did not survive. During the 8 and 16-bit era, developers had to know how to take advantage of the consoles. But now they had to be able to design bigger and more complex software, using techniques and languages that some had never used nor learnt before. They also needed a sufficient mathematical background to manipulate objects in 3D space, compute collisions, physics and so on, and perform correct projections onto 2D planes. Many self-taught developers had never attended applied mathematics and computer science courses at university and were struggling. The last thing they needed was to learn how to efficiently program a complex piece of hardware.

In an interview, Hideki Sato, who was in charge of the R&D department at SEGA, admitted that the hardware was primarily targeting the internal development teams. 3rd party support was just an afterthought.

Sony did not have this problem, as it started from a blank sheet and was aware that 3rd parties would be key to its success. The architecture of its PlayStation is simpler and entirely dedicated to one thing: pushing as many textured triangles to the screen as possible. Its single MIPS processor is helped by the GTE, “Geometry Transform Engine”, which takes care of geometry and lighting related calculations. Unlike the Saturn’s DSP, it is not a fully programmable chip: it was hardcoded for its unique mission. The CPU gives it tasks via registers and commands loaded into memory, which are closely related to the kind of task it has to accomplish. To simplify a bit: on the PlayStation you use the provided commands to tell the GTE what to do, while on the Saturn you have to program the equivalent of these commands from scratch on the DSP…

But most programmers did not even have to bother with the GTE, as Sony provided a powerful SDK (software development kit) from day one: a collection of functions that can be called from the game code on the CPU to handle the low-level tasks.

Sometime later, SEGA also provided an SDK. Called SGL, it was very 3D-oriented and provided a lot of high-level functions.

Finally, even in the early 90s, almost all 3D software was already based on triangles and backward mapping. This was a big disadvantage for the Saturn, as data produced by commercial software had to be converted to proper quads and textures before being used. Even worse, what an artist produced using this software could not perfectly match the resulting object displayed by the Saturn. All of this added to the complexity and the cost of porting multi-platform games to the Saturn.
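
Two simple conversions give an idea of that porting work. The sketch below assumes meshes are lists of 2D vertex tuples with consistent winding; both function names are hypothetical, and neither function checks that a merged quad is convex or planar, which a real conversion tool would have to do. A lone triangle can be sent to quad-only hardware as a degenerate quad by repeating one vertex, while two triangles sharing an edge can sometimes be merged into a single quad.

```python
def triangle_as_quad(tri):
    """Degenerate quad: repeat the last vertex so quad-only hardware can draw a triangle."""
    a, b, c = tri
    return (a, b, c, c)

def merge_triangles(t1, t2):
    """Merge two triangles sharing exactly one edge into one quad, or return None.

    Assumes both triangles use the same winding; the quad keeps that winding.
    """
    shared = set(t1) & set(t2)
    if len(shared) != 2:
        return None
    (apex1,) = set(t1) - shared
    (apex2,) = set(t2) - shared
    i = t1.index(apex1)
    _, s0, s1 = t1[i:] + t1[:i]   # rotate t1 so that its apex comes first
    return (apex1, s0, apex2, s1)

lower = ((0, 0), (1, 0), (0, 1))
upper = ((0, 1), (1, 0), (1, 1))
square = merge_triangles(lower, upper)
```

With forward texturing, a degenerate quad still receives a whole texture, with one of its edges squeezed into a single point, which is another reason direct conversions rarely looked identical to the artist’s original.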

Conclusion

It is true that the SEGA Saturn was a very complex beast, hard to program for. But this is not the result of 3D capabilities being an afterthought, added in a hurry in reaction to the PlayStation. I hope this article demonstrated that many of its features were designed with good 3D rendering as a goal. SEGA had of course noticed the success of its own 3D arcade games, Virtua Fighter and Virtua Racing, and knew that 3D was the way of the future. The main problem was that the engineering team lacked the 3D experience gathered by the arcade team.

Quads are considered by some as proof that the technical capabilities of the Saturn were miserable out of the gate. But while triangles are now ubiquitous, such was not yet the case in the early 90s. SEGA’s engineers were not alone in choosing quadrilaterals. Both the 3DO and the very first NVIDIA GPU, the NV1, made the same choice. Ten years earlier, the IRIS 2000 family of workstations from Silicon Graphics was also manipulating quads. Furthermore, their next line of workstations used an off-the-shelf programmable chip from Weitek to handle vertex and geometry processing. This illustrates that choosing a DSP for this task in the Saturn was not an isolated and silly choice. It was in fact simpler and cheaper than developing dedicated hardware, as Sony did with the GTE of the PlayStation.

The Saturn was of course far from ideal, with real deficiencies. In my opinion, more than quad-based rendering, it is the way it does texture mapping that is its true Achilles’ heel. The lack of any consideration for depth when the quads are drawn is another big issue.

As always in engineering, these choices were the result of compromises, and ultimately they fit quite well with the name “distorted sprites”, which was certainly meaningful for developers used to 2D. SEGA clearly underestimated the speed at which everything would transition to 3D. Like many others, they thought that 2D would still have a bright future for some years to come.

In the end, the SEGA Saturn is still more suited to 3D than most other 5th generation consoles. Everyone was aware that 3D was the future. But the processing power was barely there and it was a time of wild experimentation. The anomaly of this generation is Sony: it bet everything on 3D and on good third party support. Their PlayStation was spot on! It was very close to the best of what could be reasonably achieved in 1994.

In Japan, the Saturn performed better than the PlayStation during the first few years. Hardcore gamers were fully satisfied by the arcade games published by SEGA. But Sony and its legion of 3rd party studios managed to expand the market to a new audience that was not seduced by SEGA’s offering.

And when Final Fantasy 7 hit the stores, it was clear that nothing would stop Sony’s rise to prominence.